The Original Question

Inspired by the NEOShield project, we wanted to find out: when a near-Earth object (NEO) is on course to hit Earth, what can we do? A few mitigation measures are available to us in the modern age:

1) Kinetic impactor

2) Gravity tractor

3) Blast deflection

Given these three choices, our group wanted to determine which one is the most cost-effective. To this end, we generated two asteroids with diameters of 40 m and 4.1 km, using the mean of the known asteroid densities, which was 4.95 g/cc. An asteroid as large as 4.1 km is highly unlikely, but we deliberately placed the two asteroids at opposite ends of the spectrum in size and mass. That gives us a range from which to estimate what to do for any asteroid that lies between the two extremes.
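To make the two test cases concrete, here is a minimal sketch of how the masses follow from the diameters and the density. It assumes each asteroid is a perfect sphere, which is our simplification for illustration; the function name `sphere_mass` is hypothetical.

```python
import math

# Density from the text: 4.95 g/cc, converted to kg per cubic meter.
DENSITY_KG_M3 = 4.95 * 1000.0


def sphere_mass(diameter_m, density_kg_m3=DENSITY_KG_M3):
    """Mass of a solid sphere (assumed shape) from its diameter and density."""
    radius = diameter_m / 2.0
    volume = (4.0 / 3.0) * math.pi * radius ** 3
    return density_kg_m3 * volume


small_mass = sphere_mass(40.0)      # the 40 m asteroid
large_mass = sphere_mass(4100.0)    # the 4.1 km asteroid

print(f"40 m asteroid:   {small_mass:.3e} kg")
print(f"4.1 km asteroid: {large_mass:.3e} kg")
print(f"mass ratio:      {large_mass / small_mass:.3e}")
```

Because mass scales with the cube of the diameter, the larger asteroid is roughly a million times more massive than the smaller one, which is why the two cases bracket such a wide range.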

The Methods

- Kinetic Impactor

This measure uses classical Newtonian mechanics, i.e., we launch an object into space at a very high speed to intercept and collide with the asteroid. The physics behind the kinetic impactor is the transfer of momentum from the impactor to the asteroid (modeled by the formula m1v1 = m2v2, where the momentum lost by the impactor equals the momentum gained by the asteroid). The goal is not to destroy the asteroid, but to transfer enough momentum to deflect it off course, decelerate it, or accelerate it. Any of the three outcomes changes the velocity of the asteroid so that it narrowly misses Earth. This is one of the cleaner ways of collision mitigation, as the debris that results from the impact should be small enough to burn up if it enters Earth’s atmosphere (assuming that it does).

- Gravity Tractor

This measure employs the idea of universal gravitation (modeled by the formula F = G(Mm)/r^2). We would send a very massive satellite into space and rely on the small gravitational force between it and the asteroid to slowly (and hopefully surely) adjust the course of the asteroid so that it misses Earth. This is the cleanest method, as it does not require any collision and therefore produces no debris.

- Blast Deflection

As the name suggests, this is the use of explosives to move the asteroid away from its collision course with Earth. Because of the size, effect, and ease of launch, we assumed that the detonation would be a nuclear one, which was also the one suggested on the NEOShield site. This method is not as clean, as the debris can be irradiated by the blast and may still be big enough to pass through Earth’s atmosphere and cause damage.
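The two formulas quoted in the methods above (momentum transfer and universal gravitation) can be compared with a rough sketch. Everything numeric here is an assumption chosen for illustration, not data from our project: the 500 kg impactor, the 10 km/s impact speed, the 20,000 kg tractor, and the 100 m standoff distance are hypothetical, and the collision is treated as perfectly inelastic with no extra push from ejecta.

```python
G = 6.674e-11                      # gravitational constant, N m^2 / kg^2
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Roughly a 40 m sphere at 4.95 g/cc (approximate, assumed spherical).
ASTEROID_MASS_KG = 1.66e8


def impactor_delta_v(impactor_mass_kg, impact_speed_m_s, asteroid_mass_kg):
    """Asteroid velocity change if the impactor sticks to it.

    Conservation of momentum: m * v = (M + m) * delta_v.
    """
    return impactor_mass_kg * impact_speed_m_s / (asteroid_mass_kg + impactor_mass_kg)


def tractor_acceleration(spacecraft_mass_kg, distance_m):
    """Asteroid acceleration from the spacecraft's gravity.

    F = G * M * m / r^2, so a = F / M = G * m / r^2.
    """
    return G * spacecraft_mass_kg / distance_m ** 2


# Hypothetical mission numbers (assumptions, see the lead-in).
dv_impact = impactor_delta_v(500.0, 10_000.0, ASTEROID_MASS_KG)
a_tractor = tractor_acceleration(20_000.0, 100.0)
dv_tractor_one_year = a_tractor * SECONDS_PER_YEAR

print(f"kinetic impactor:  {dv_impact:.4f} m/s, delivered at once")
print(f"gravity tractor:   {a_tractor:.3e} m/s^2")
print(f"tractor, 1 year:   {dv_tractor_one_year:.4f} m/s")
```

With these assumed numbers, the impactor changes the small asteroid's velocity by about 3 cm/s in a single hit, while the tractor needs a full year of station-keeping to build up about 4 mm/s. Note that the asteroid's own mass cancels out of the tractor's acceleration, so only the spacecraft mass and the distance matter there.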

Cost-Effectiveness

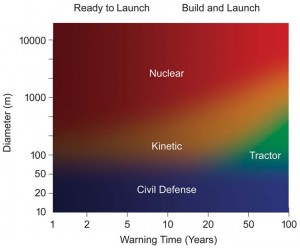

Source: the NEOShield Project

The figure above shows how much advance warning we need for a given asteroid size, along with the most likely way to prevent the collision. As the graph suggests, the fastest option to deploy is a nuclear warhead, followed by a kinetic impactor and lastly the gravity tractor.

From a cost point of view, sending each object into space would cost around the same, but the cost of building each one ranks (from most expensive to cheapest): nuclear bomb, gravity tractor (which is just a satellite), and kinetic impactor (an object with a propulsion system). Under these criteria, the kinetic impactor is the most cost-effective for moderate time constraints and moderate asteroid sizes.

The major drawback of the gravity tractor is that it takes too long for the effect to build up, and it requires an extremely accurate fix on the asteroid's location, plus precise maneuvering to put the satellite in an optimal position for gravity to redirect the asteroid. Obtaining location information that precise is beyond the range of accurate sensors in our current state of technology. We can only get estimates, and estimates are not good enough for effective gravitational towing.

For nuclear warheads, there is the problem of safety and post-blast repercussions. If the asteroid happens to have a fault running through the middle (as dramatized in the film Armageddon), the blast and shockwave from the bomb could split the asteroid into two large pieces and cause even more destruction.

For a kinetic impactor, as supported by the calculations we made in the data section, as long as the impactor is moving at a sufficient velocity (which is easier to reach in space, where there is no friction to slow it down), it can cause an asteroid of any size to miss Earth, given enough distance and time between the Earth and the rock.
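The phrase "given enough distance and time" can be turned into a rough number. The sketch below assumes the asteroid simply drifts sideways at the extra velocity it received, so the miss distance is delta-v times the warning time, and it takes one Earth radius as the target miss distance. Both are our simplifying assumptions: real orbits do not behave this linearly, so treat the output as an order-of-magnitude guide only. The function name `lead_time_years` is hypothetical.

```python
EARTH_RADIUS_M = 6.371e6                 # mean Earth radius in meters
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def lead_time_years(delta_v_m_s, miss_distance_m=EARTH_RADIUS_M):
    """Warning time needed for a velocity change to add up to the miss distance.

    Linear drift assumption: distance = delta_v * time.
    """
    return miss_distance_m / delta_v_m_s / SECONDS_PER_YEAR


# A few illustrative velocity changes (assumed values, in m/s).
for dv in (0.001, 0.01, 0.1):
    years = lead_time_years(dv)
    print(f"delta-v of {dv} m/s needs about {years:.1f} years of warning")
```

The required warning time is inversely proportional to the velocity change, which is why a tiny push delivered early can do the same job as a large push delivered late.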

The Answer

After evaluating the three methods of preventing collision, we found kinetic impact to be the most cost-effective. In terms of pure effectiveness, a nuclear detonation would have the most impact on the asteroid, but too many political implications and destructive consequences come with nuclear explosions. Gravity traction is the cleanest and safest method, but due to the lack of technology and accurate information, it is the least effective. It also demands a great deal of time and effort to get the most out of an effect that is already insignificant compared to kinetic impact and blast deflection.

What Did We Learn?

Even though preventing a world-wide catastrophe caused by an asteroid sounds like a big deal (which it really is), the science behind pushing an asteroid away is based on simple classical physics principles (mostly Newton’s Second Law). Through this project, we learned what people on Earth would have to do to avoid asteroid collisions and how much effort it would take to achieve safety. Through calculations, we learned just what magnitudes we would be dealing with when it comes to asteroids and the potential elimination of the human race. By doing this project, we also acquired modeling skills and practiced applying what we had learned in physics to novel situations such as the one we wanted to investigate.

If We Were to Do This Once Again:

For the demonstration that we filmed, we would definitely change the components. The surface we used (a pool table) had a lot of friction, even though it gave us a lot of control over the direction the ball rolled in. Additionally, we would like to use a moving representation of the kinetic impactor to bring the demonstration closer to the actual situation of a kinetic impact on an asteroid. It would be nice to use metal ball bearings on a slippery surface to represent the asteroid and impactor and minimize friction.