Describe your project

For our project, we investigated the wi-fi signal on campus. We thought it would be useful to find locations on campus with a good internet connection and speed. We began by selecting some of the more popular study spaces on campus, such as the main library, the Retreat, the Deece, the Old Bookstore, and the Bridge Building. Our goal was to determine scientifically whether there is a location on campus with the “best” wi-fi signal strength and speed. For this, we used two types of equipment: a radio frequency (RF) meter and two smartphones. The RF meter was used to record the strength of the signal at a given location, while a smartphone app was used to measure the data transfer rate, or signal speed. If our data showed that one location had a superior wi-fi signal, we hoped to share this information with our peers in order to enhance their studying and learning experience at Vassar.

Present your results.

What do your results mean?

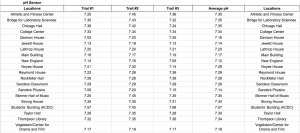

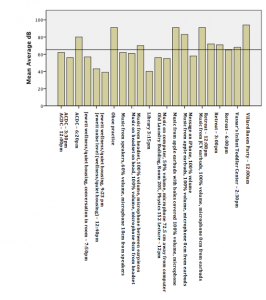

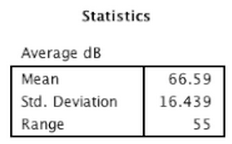

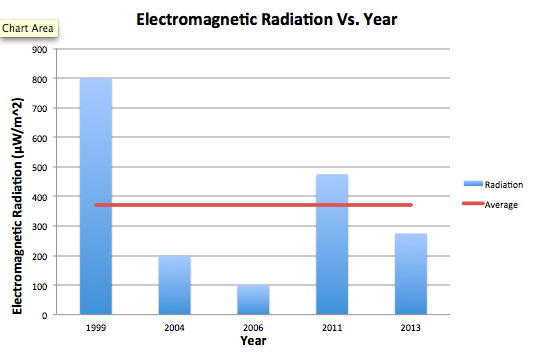

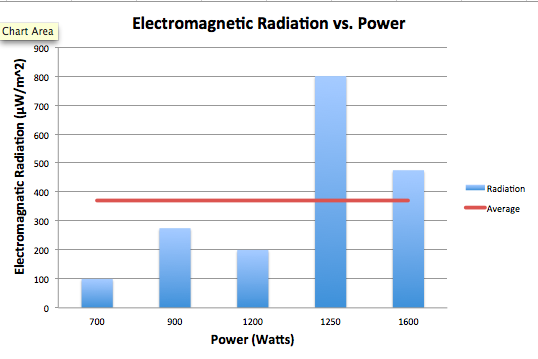

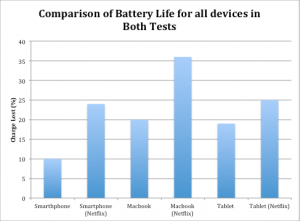

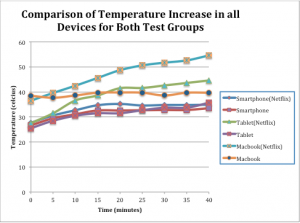

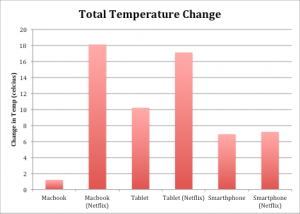

We collected two different types of data. The first was the rate of data transfer on two different school networks, Student Secure and eduroam. Using a smartphone app, we recorded this rate in Mbps, or megabits per second, which measures how quickly data was received by our smartphones. The locations with the highest Mbps had the fastest rates of data transfer, meaning that content can load faster there than at other locations. Our data show that, when connected to Student Secure, three locations consistently had the highest rates of data transfer: the Main Library, the Library Basement, and the Deece. The rates at these three locations were all around 150 Mbps, except for the Library Basement measurement over 120 seconds, which was 107.6 Mbps. Not only were these the highest rates when averaged over the three time periods, but they were also considerably higher than those at the rest of the locations. Another trend we noticed was that, when connected to Student Secure, the Bridge Building had the slowest rate over all three time frames. This was especially intriguing because it is an academic building and one would expect it to have a fast, reliable connection, but the data contradict this. Perhaps this is because the building is new and its wi-fi server has not yet received many updates. For the data taken on the eduroam network, there were no immediately noticeable trends. The data appear scattered, and no location seems to have exceptionally fast wi-fi; the rates fluctuate by location and across time frames. Overall, the results represent the average amount of data transferred over two of the popular wi-fi networks on campus and the strength of the radio frequency level emitted over two minutes.
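
The averaging and ranking described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the procedure used to produce our graphs: the function name `rank_by_mean_rate`, the location labels, and the numbers are all hypothetical placeholders rather than our measured values.

```python
from statistics import mean


def rank_by_mean_rate(rates_mbps):
    """Return (location, mean rate) pairs sorted from fastest to slowest.

    rates_mbps maps a location name to the list of rates (in Mbps)
    recorded there, one value per time frame.
    """
    averages = {loc: mean(values) for loc, values in rates_mbps.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Placeholder readings, NOT our measured data: one value per time frame.
example = {
    "Location A": [150.0, 148.0, 152.0],
    "Location B": [90.0, 95.0, 85.0],
    "Location C": [120.0, 110.0, 130.0],
}

for location, avg in rank_by_mean_rate(example):
    print(f"{location}: {avg:.1f} Mbps")
```

Running the same function once per network would give one ranking for Student Secure and one for eduroam.
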
For the data collected with the RF meter, a trend persisted in which the Deece’s wi-fi signal strength was considerably lower than at the other locations. This perhaps has something to do with the number of people who are usually at the Deece using the networks, or with the spacious design of the building. Other than that, the signal strengths were consistent across all three axes, but they were very low compared to the Library Basement signal. For all the data collected on the z-axis, the Library Basement had the strongest signal strength. This is interesting because the Library Basement had only the third-highest values in terms of rate. Perhaps this shows an inverse relationship between rate and signal strength. This relationship could also be explored with the data from the Old Bookstore and the Retreat. These two locations consistently had some of the lowest rates; however, when we measured strength, they surpassed the Bridge Building and the Deece, which was the inverse of what we saw in terms of rate. There are many ways to interpret these data, but none of them makes conceptual sense: it would be incorrect to assume that the stronger the wi-fi signal, the slower the rate. This question would be better answered with more data and less variability. As seen in the graphs, there were also interesting fluctuations. The strengths on each axis ranged from very large values to very small values, as seen with the Deece. No trends can truly be drawn from the strength data because they are so variable.
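
One way to test the suspected inverse relationship, rather than judging it by eye, would be to compute a Pearson correlation coefficient between the average rate and the average strength at each location. The sketch below is an assumption about how that could be done, not an analysis we performed; the function name `pearson_r` and the values are hypothetical placeholders.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


# Placeholder values, NOT our measurements: one pair per location.
rates_mbps = [150.0, 120.0, 90.0, 60.0]
strengths = [1.0, 2.0, 3.0, 4.0]  # arbitrary units

r = pearson_r(rates_mbps, strengths)
print(f"r = {r:.2f}")
```

A value of r near -1 would support an inverse relationship, a value near +1 a direct one, and a value near 0 no linear relationship. With only a handful of locations, any such value should be read with caution.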

Were your results as predicted?

When we first started the project, we expected the main library to have the highest signal strength and data transfer rate, followed by the Bridge Building, the library basement, the Old Bookstore, the Retreat, and then the Deece. Because the library is the most common place to study and do work, and was built specifically for that purpose, we expected it to have the strongest signal. Unfortunately, the data did not turn out as we predicted. For the RF meter, the signal strength data were very inconsistent across the various locations for the x-, y-, and z-axes. Overall, the x, y, z, and average readings all showed that the library basement had the highest signal strength. The wi-fi app data also differed greatly from what we expected, and the readings differed from each other over time. For Student Secure, the main library and the library basement were in the top three for all three of the time frames, while for eduroam the library basement and the Bridge Building were fairly consistently in the top two. Overall, the library basement, which we expected to be the third highest in signal strength and rate of data transfer, was relatively the highest.

What science did you learn during this project?

During this project, we used the RF meter and a smartphone app called Wi-Fi Sweetspots. The RF meter allowed us to measure the radio frequency levels being emitted. Radio frequency refers to a part of the electromagnetic spectrum and to the propagation of radio waves. When an RF current is sent out, it produces an electromagnetic field that travels through space, which is what allows wireless technology to work. The RF meter reported its readings in amperes per meter, a unit of magnetic field strength. The wi-fi app records the average rate at which data is transferred on different wi-fi networks. This was measured in megabits per second; one megabit per second is one million bits per second, with a bit being a unit of data. The higher the megabits per second, the faster the connection.
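
To make the unit more concrete, the short sketch below converts a rate in megabits per second into an idealized transfer time. It assumes a 100-megabyte file (a size chosen only for illustration), a perfectly steady rate, and no network overhead; the function name `download_seconds` is hypothetical. The two rates are the approximate values quoted in our results for Student Secure.

```python
def download_seconds(file_size_megabytes, rate_mbps):
    """Ideal time in seconds to transfer a file at a steady rate."""
    if rate_mbps <= 0:
        raise ValueError("rate must be positive")
    megabits = file_size_megabytes * 8  # 1 byte = 8 bits
    return megabits / rate_mbps


# 100 MB is an assumed file size, used only for illustration.
for rate in (150.0, 107.6):
    print(f"{rate} Mbps: {download_seconds(100, rate):.1f} s")
```

Under these assumptions the file would take roughly 5.3 seconds at 150 Mbps and roughly 7.4 seconds at 107.6 Mbps. Note the factor of eight: a megabit is one eighth of a megabyte, so a rate in Mbps is not the same as megabytes per second.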

How does our project fit in with current science/technology?

The world today is a digital one where nearly everything is run by technology. The world is constantly changing and, with it, technology is advancing. Because technology is so fast-paced, it is important to have a reliable connection, and wi-fi provides that connection. Wi-fi is an important part of our everyday life, especially as college students. Scientists and engineers are constantly looking for ways to improve the things we already have. Our project begins to do this by analyzing wi-fi signal strength and speed. With the knowledge we have acquired, we hope to show the rest of Vassar’s population where to expect the most reliable and strongest network. Even more interestingly, our project fits well with Vassar Urban Enrichment’s initiative to improve wi-fi quality on campus. With our data (despite its limitations), they would gain a more quantitative view of where wi-fi improvement should be focused, as opposed to their more qualitative approach.

What would you do differently if you had to do this project again?

If this project were to be repeated, we would pick more locations for our data. Although our data is extensive in that it represents some of the more heavily used spaces on campus, it would be more beneficial to the student body if we also investigated the wi-fi signal strength and speed in more locations, such as residential spaces and more academic buildings. Surveying more locations would expand our data set and allow us to notice trends more clearly. Perhaps a certain area of campus gets a better wi-fi signal than others, or maybe dorms have the best signal of all because that is where students are expected to spend most of their time. It would also be advantageous to use equipment that measured the data on the same scale. This way, we would not be observing essentially two different variables; instead, we could accurately compare and contrast power and strength on their own, independently. We would also control more variables if we had to redo this project. Primarily, we would try to control for time by taking data at all locations at approximately the same time of day, to make our data as consistent as possible. We could then compare how the signal varies by location alone, since the time of day may affect it because certain spaces are more occupied at different times.

What would you do next if you had to continue this project for another 6 weeks?

If we found a correlation between the two variables, we would be interested in creating a mathematical formula that describes it. Further, we would look into examining more correlations, or the lack thereof. Another six weeks would allow us to use different equipment in order to find other relationships involving wi-fi signal strength and speed. Most importantly, another six weeks would give us a way to decrease variability, by collecting more data points and surveying other locations. This way, we could be more confident in the results we have gathered and the correlations observed. Finally, we would look into collaborating with Vassar Urban Enrichment on their initiative to improve wi-fi quality on campus.
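
As a rough idea of what such a formula could look like, the simplest candidate would be a straight line, rate = slope × strength + intercept, fitted by least squares. The sketch below is only one possible approach and assumes the relationship is linear, which our current data does not establish; the function name `fit_line` and the values are hypothetical placeholders, not our measurements.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("cannot fit a line when all x values are equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Placeholder values, NOT our measurements: one pair per location.
strengths = [1.0, 2.0, 3.0, 4.0]          # arbitrary units
rates_mbps = [140.0, 120.0, 100.0, 80.0]  # Mbps

slope, intercept = fit_line(strengths, rates_mbps)
print(f"rate = {slope:.1f} * strength + {intercept:.1f}")
```

A negative slope would correspond to the inverse relationship, and how far the real points fall from the fitted line would show how much of the variability the formula leaves unexplained.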

By Brenda Dzaringa, Esperanza Garcia, and Anya Scott-Wallace