What’s my body doing while I sleep?

As we wrap ourselves in the warm embrace of our blankets each night, we hand over conscious control of our body to our so-called “reptilian” and limbic brain regions, and allow biology, under the governance of physics, to steer us through the night. We keep breathing, at different rates at different times; our body temperature fluctuates but remains within the normal range; our brains progress through a sequence of states, each with its own mysterious functions; and our bodies are free to move, as long as our brains aren’t ready to arrest their movement. In sum, our bodies are quite active while we sleep, doing important things to keep us performing optimally while we are awake.

I wanted to learn a little about this behavior, specifically with regard to the muscular movements we make, the temperature changes our bodies undergo while we sleep, and the relationship between these two things. There are actually apps that allow for just this kind of investigation; they fall into the class of sleep-tracking apps, which utilize the sensors found in smartphones to track at least one aspect of sleeping behavior and let you know information about the quality of your night’s rest, such as the proportion of time spent in deep sleep, the number of times you woke up during the night, and even what your snoring sounded like.

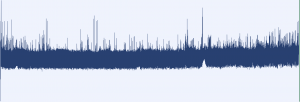

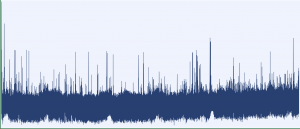

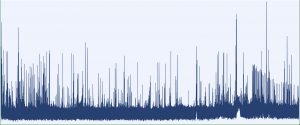

These applications typically use the accelerometer in a smartphone to detect motion in the mattress caused by the sleeper’s movements. An accelerometer senses acceleration, including the constant pull of gravity, by noticing how the components of the device react to it, which lets it detect both movement and changes in orientation. The reaction of a particular component, typically the deflection of a flexible silicon structure, changes an electrical signal that the phone’s operating system can interpret. The sleep tracking apps that use this sensor require that the phone be placed on the mattress on which the user is sleeping, so that when the person moves, the mattress moves and the app can keep track of the motion. The results of the motion tracking are then represented in a graph that plots levels of motion activity over time. Relatively more motion is interpreted as an indicator of light sleep, whereas very little motion is interpreted as a more restful sleep stage referred to as deep sleep.
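As a rough illustration of how raw accelerometer readings might be turned into a light or deep label, the sketch below measures how far each sample's acceleration magnitude departs from gravity and labels a window by its largest departure. The function names, the 0.05 m/s² threshold, and the sample values are all assumptions made for illustration; this is not how either app is known to work.

```python
import math

G = 9.81  # standard gravity in m/s^2


def motion_magnitude(sample):
    """Return how far the acceleration magnitude departs from gravity (m/s^2)."""
    x, y, z = sample
    return abs(math.sqrt(x * x + y * y + z * z) - G)


def classify_window(samples, threshold=0.05):
    """Label a window 'light' if any sample exceeds the hypothetical threshold."""
    peak = max(motion_magnitude(s) for s in samples)
    return "light" if peak > threshold else "deep"


# Made-up (x, y, z) readings in m/s^2 for a phone lying flat on a mattress.
still = [(0.0, 0.0, 9.81), (0.01, 0.0, 9.81), (0.0, 0.01, 9.80)]
restless = [(0.0, 0.0, 9.81), (0.4, 0.2, 10.1), (0.1, 0.0, 9.7)]

print(classify_window(still))
print(classify_window(restless))
```

A real app would likely smooth the signal and adapt the threshold to the mattress, which is one reason two apps can draw different graphs from the same motion.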

I asked two main questions in my experiment:

- To what extent do the motion graphs of two different sleep tracking apps agree with each other, given that they receive the same input of motion data?

- How accurate are the sleep stage graphs in representing actual changes in sleep stages?

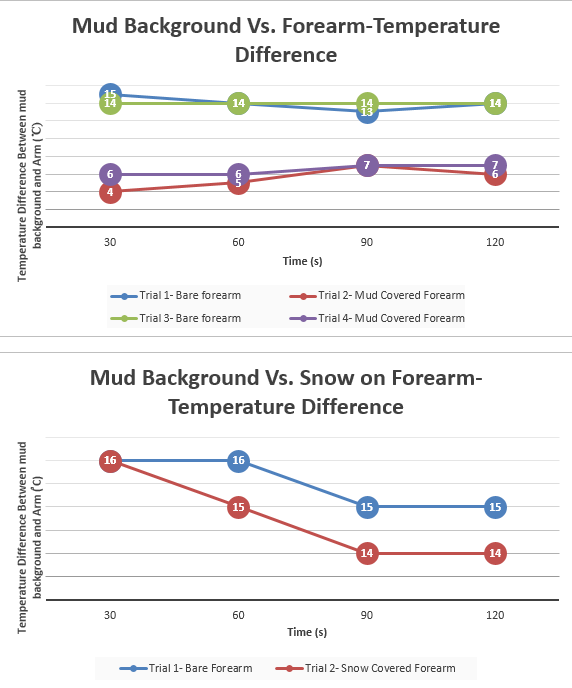

I investigated the first question by running two sleep tracking apps, “Sleep as Android” and “SleepBot,” concurrently while I slept, and later cross-checking the peaks in their motion graphs to see what percentage of the total peaks showed up in both apps. To investigate the second question, I attempted to use core body temperature as a measure of the different sleep stages. Core body temperature is lower during sleep than during waking hours, and some studies have shown that it is lowest during deep (Stage 3) sleep. Other studies have contradicted this observation, however, and as far as I was aware there was no consensus on the matter. I collected temperature data, as an estimate of core body temperature, using a Surface Temperature Sensor taped to the skin of my stomach, which recorded while the sleep tracking apps were also collecting their data.
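The cross-check of peaks was done by eye, but the same idea can be sketched in code. The example below assumes each app's peaks are available as a list of times in hours and treats two peaks as the same event if they fall within a tolerance of each other. The function name, the 0.1 h tolerance, and the peak times are hypothetical.

```python
def consistent_peak_percent(peaks_a, peaks_b, tolerance_h=0.1):
    """Percent of all peaks (from both apps) that have a counterpart in the other app."""

    def has_match(t, others):
        return any(abs(t - o) <= tolerance_h for o in others)

    total = len(peaks_a) + len(peaks_b)
    if total == 0:
        return 0.0
    shared = sum(has_match(t, peaks_b) for t in peaks_a)
    shared += sum(has_match(t, peaks_a) for t in peaks_b)
    return 100.0 * shared / total


# Made-up peak times (hours after falling asleep) for two apps.
app_a = [0.5, 1.2, 2.0, 3.4, 5.1]
app_b = [0.55, 1.25, 3.35, 4.2]

print(round(consistent_peak_percent(app_a, app_b)))  # 6 of 9 peaks match, so 67
```

The denominator is the combined peak count of both apps, which mirrors the way the totals are formed in the results table.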

The Surface Temperature Sensor detects changes in temperature by noticing changes in the resistivity (how strongly a material opposes the flow of an electrical current) of its sensing element, which is called a thermistor. The resistivity of the thermistor changes predictably in response to changing temperature conditions, and this makes it possible to use resistivity as a measure of changing temperature. Additionally, the Surface Temperature Sensor’s thermistor is exposed, so it can be used to measure small changes in temperature, including changes in skin temperature.
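To make the "changes predictably" step concrete, here is a minimal sketch of one common way to convert a thermistor's resistance to temperature, the Beta-parameter model: 1/T = 1/T0 + (1/B) ln(R/R0), with T in kelvin. The constants below (10 kΩ at 25 °C, B = 3950 K) are generic example values and are assumptions; they are not the specifications of the Surface Temperature Sensor, whose actual calibration is not given here.

```python
import math


def thermistor_temp_c(resistance_ohm, r0_ohm=10_000.0, t0_c=25.0, beta_k=3950.0):
    """Convert NTC thermistor resistance to degrees Celsius using the Beta model.

    r0_ohm, t0_c and beta_k are assumed example constants, not real sensor specs.
    """
    t0_k = t0_c + 273.15
    inv_t = 1.0 / t0_k + math.log(resistance_ohm / r0_ohm) / beta_k
    return 1.0 / inv_t - 273.15


print(thermistor_temp_c(10_000.0))  # at the reference resistance: about 25 °C
print(thermistor_temp_c(6_500.0))   # lower resistance means warmer: about 35 °C
```

With these assumed constants, a drop in resistance corresponds to a rise in temperature, which is the behavior of a negative temperature coefficient (NTC) thermistor.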

I collected data with the two apps and a LabQuest2 device, which interpreted the data from the Surface Temperature Sensor, on five different nights of varying sleep duration.

Results

See attachment for motion activity graphs and temperature changes graphs.

| Day | Total Peaks, “SleepBot” | Total Peaks, “Sleep as Android” | % consistent peaks |
| --- | --- | --- | --- |
| 1 | 27 | 22 | 42/49 = 86% |
| 2 | 26 | 20 | 35/46 = 82% |
| 3 | 21 | 19 | 36/40 = 90% |
| 4 | 26 | 21 | 38/47 = 81% |
| 5 | 22 | 20 | 36/42 = 86% |

Table 1. Comparison of the number of motion activity peaks between the “Sleep as Android” and “SleepBot” graphs. The average percentage of consistent peaks over the five-day recording period was 85%.

Table 1 shows that the motion data from the two sleep tracking apps were quite consistent with each other: on average, 85% of the peaks appeared in both sets of graphs. Neither app presented a motion graph that quantified the level of motion activity numerically. “SleepBot” explicitly gave a scale of high, medium and low, whereas “Sleep as Android” did not, although relative levels could be inferred from the shape of its curve. “SleepBot” also asked me to select a level of mattress firmness, and I chose high based on my experience with the mattress on which I slept, so the app was configured to approach the motion data with more caution. This could be a confound in this data set because “Sleep as Android” did not prompt me to do the same. It may also explain why, on a qualitative reading of the graphs, “SleepBot” appears to show lower activity than “Sleep as Android.”
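The percentages in Table 1 can be recomputed with a few lines of code. The sketch below divides the consistent peaks by the combined peak count of both apps for each day; the variable and function names are illustrative. Recomputing from the fractions as printed reproduces the reported values for Days 1, 3, 4 and 5, while the Day 2 fraction (35/46) works out to about 76% rather than 82%, so one of the Day 2 figures may contain a transcription slip. The 85% average corresponds to the mean of the reported percentages.

```python
# (day, SleepBot peaks, Sleep as Android peaks, consistent peaks, reported %)
table_1 = [
    (1, 27, 22, 42, 86),
    (2, 26, 20, 35, 82),
    (3, 21, 19, 36, 90),
    (4, 26, 21, 38, 81),
    (5, 22, 20, 36, 86),
]


def percent_consistent(consistent, sleepbot, sleep_as_android):
    """Consistent peaks as a whole-number percent of the combined peak count."""
    return round(100 * consistent / (sleepbot + sleep_as_android))


recomputed = {day: percent_consistent(c, sb, sa) for day, sb, sa, c, _ in table_1}
reported_mean = sum(row[4] for row in table_1) / len(table_1)

print(recomputed)     # {1: 86, 2: 76, 3: 90, 4: 81, 5: 86}
print(reported_mean)  # 85.0
```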

| Day | (Time (h), Temperature (°C)) | Sleep Stage | Activity Level |
| --- | --- | --- | --- |
| 1 (Temp. range: 2 °C) | (4.4, 34.9) | Light | Active |
| | (1.8, 35.1) | Light | Active |
| | (2.5, 36) | Deep | Inactive |
| | (3.6, 36.1) | Deep | Inactive |
| | (5.9, 36.1) | Light | Active |
| | (0.9, 36.9) | Deep* | Inactive |
| 2 (Temp. range: 1.1 °C) | (1.3, 35.3) | Light | Active |
| | (1.9, 35.4) | Deep* | Inactive |
| | (0.7, 35.9) | Light | Inactive |
| | (2.4, 35.9) | Deep | Inactive |
| | (1.6, 36.4) | Deep | Active |
| 3 (Temp. range: 1.3 °C) | (0.3, 35) | Light | Active |
| | (1.4, 35.2) | Light | Active |
| | (4.0, 35.2) | Deep* | Active |
| | (4.9, 35.9) | Deep | Inactive |
| | (1.2, 36.3) | Light* | Active |
| 4 (Temp. range: 1.3 °C) | (4.5, 35.5) | Light | Active |
| | (3.2, 35.6) | Light | Active |
| | (6.0, 35.7) | Light | Active* |
| | (5.7, 36.2) | Deep | Active |
| | (1.2, 36.8) | Light | Inactive |
| 5 (Temp. range: 1.1 °C) | (1.7, 34.8) | | |
| | (1.1, 35.4) | | |
| | (3.1, 35.9) | | |

Table 2. Relative maximum and minimum temperatures and corresponding sleep stage and activity level according to “Sleep as Android.” *On the border between light and deep sleep.
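The "Temp. range" figure listed for each day in Table 2 is the difference between the highest and lowest temperature recorded among that day's relative maxima and minima. A short sketch that recomputes it from the tabulated temperatures follows; the dictionary name is illustrative.

```python
# Temperatures (°C) of the relative maxima and minima listed for each day in Table 2.
extrema_c = {
    1: [34.9, 35.1, 36.0, 36.1, 36.1, 36.9],
    2: [35.3, 35.4, 35.9, 35.9, 36.4],
    3: [35.0, 35.2, 35.2, 35.9, 36.3],
    4: [35.5, 35.6, 35.7, 36.2, 36.8],
    5: [34.8, 35.4, 35.9],
}

# Round to one decimal place to match the sensor readings and avoid float noise.
ranges = {day: round(max(temps) - min(temps), 1) for day, temps in extrema_c.items()}

print(ranges)  # {1: 2.0, 2: 1.1, 3: 1.3, 4: 1.3, 5: 1.1}
```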

Table 2 shows that three out of seven deep sleep instances occurred while there was some amount of movement, and two out of fourteen light sleep instances occurred while there was no movement. Since these are the minority of cases, it seems like deep sleep occurred while the body was not moving much, and light sleep generally occurred while the body was more active. This is consistent with my expectations.

As can be seen in Table 2, all but two of the deep sleep instances, one of which was on the border between deep and light sleep, occurred while the temperature was between 35.9 °C and 36.9 °C, inclusive. The full deep sleep temperature range was 35.2 °C to 36.9 °C. Nine out of fourteen light sleep instances in this table corresponded to a temperature below 35.9 °C; the other five were at or above 35.9 °C, the temperature at which most of the deep sleep instances began. Light sleep’s full range was 34.8 °C to 36.8 °C. Although the temperature ranges of the two stages overlap, there is one clearly exclusive range: only light sleep occurred when the temperature was lower than 35.2 °C. These data indicate that light sleep generally occurs while core body temperature is lower, and deep sleep seems to occur when core body temperature is relatively higher, although the latter is less clear from the data.

This finding is not exactly what I expected, as my background research indicated that core body temperature is lowest during deep sleep. However, I was also aware of some studies that had found the opposite result, namely that core body temperature is at its highest during deep sleep, which is what I observed in this experiment.

Several limitations could have affected this result. The sleep stage graphs from the “Sleep as Android” app were not as precise as they could be: the app chunked together motion data containing frequent peaks of activity into one large period of light sleep, even though there were short periods of baseline activity within these ranges that could have reflected short periods of deep sleep. This treatment of the data could be interfering with my coding of each selected temperature to its corresponding sleep stage. In addition, the temperature sensor was taped to my skin, which limited my range of motion while I slept. I was conscious of this as I went to sleep, so at least some of my motion behavior may have differed from what it would have been under normal conditions without the sensor.

Overall, core body temperature, if measured in a way similar to how it was done in this experiment, might not be a good way to track a sleeper’s progression through the various sleep stages. In addition, I think my experimental design for answering the question of how well the apps represent actual changes in sleep stages was flawed to begin with, since core body temperature is not a well-established means of tracking such changes.

Science Learned

I learned one exact mechanism through which temperature probes detect changes in temperature, and how this information can be represented in real time on devices like the LabQuest2. I also learned about a part of my smartphone that I was not previously aware of. Specifically, I learned how accelerometers work on the microscale to enable the screen rotation function of smartphones, and that this data can be harnessed by apps downloaded on the phone, which is interesting to me because it intersects with my interest in computer science.

Current Technology Connection

There is an abundance of smartphone applications today that attempt to track the occurrence and quality of bodily functions in which people are interested. Many fitness tracking apps are similar in broad design to sleep tracking apps: they use sensors that are already in the phone in ways that differ from the sensors’ regular functions. Some apps even add their own accessories to do things like track heart rate. This field, of course, depends largely on the technologies available for sensing features of the world and features of people. Sleep tracking apps are also connected to the broader field of artificial intelligence, which includes work on developing devices that can intelligently sense the environment.

Improvements to the experiment

If I were to do this project again, I would make more of an effort to reduce potential confounds. I would probably set the sensitivity level of the accelerometer to normal in the “SleepBot” app and be more consistent with the placement of the temperature sensor. I would also like to investigate further the role of the circadian rhythm in controlling body temperature and how this interacts with body temperature during sleep. Through the circadian system, body temperature fluctuates throughout the day, typically peaking in the late afternoon or early evening and reaching its minimum in the early morning hours, but I wonder how other activities performed during the day or other processes occurring in the body might affect this normal rhythm. I think it is possible that activities during waking hours could affect what happens during sleeping hours, and I wonder whether this would have any effect on the stages of sleep during the night. This could be tested by observing temperature and motion activity in groups that differ in their daytime activities, such as physical exercise or the lack of it, or higher or lower stress levels. Additionally, more knowledge about the circadian system’s control of temperature would have helped me to better interpret the temperature data I collected. So, if I had to continue this project for another six weeks, I would gather more data in the way that I have been, but I would also vary my waking-hours activity to test whether there is a consistent effect on sleep stages and temperature changes during sleep.

PHYS-152_Graphs