An Analysis of Rock Salt Runoff

Michael Eacobacci

Introduction

As someone who is interested in environmental chemistry, I designed a project in which I could empirically analyze the environmental effects of something that is done every winter at Vassar: salting the roads and sidewalks. After the snow melts, the water flows down into rivers and lakes and carries the dissolved rock salt with it. This leads to significantly higher salt concentrations in the water, which can be very harmful to many fish and plant species. Over the course of 16 days I took 12 water samples from the river running under the bridge building. I was careful to sample from around the same area each time and tried to control as many variables as possible. I then analyzed these samples with a Vernier Spectrometer. I first calibrated the spectrometer with distilled water taken from one of the Chemistry labs. Then, to get each data point, I shook the water from a specific day, dipped a cuvette into the holding jar, and ran the spectrometer. I analyzed each day’s water twice to guard against a bad sampling.

Results

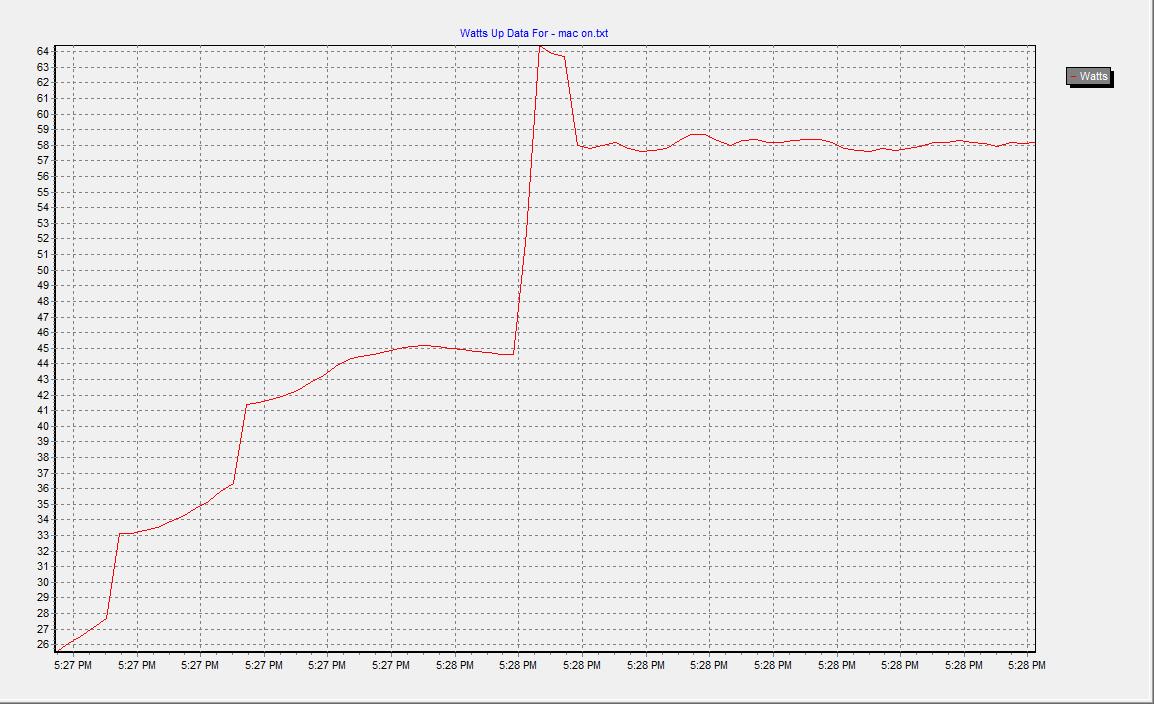

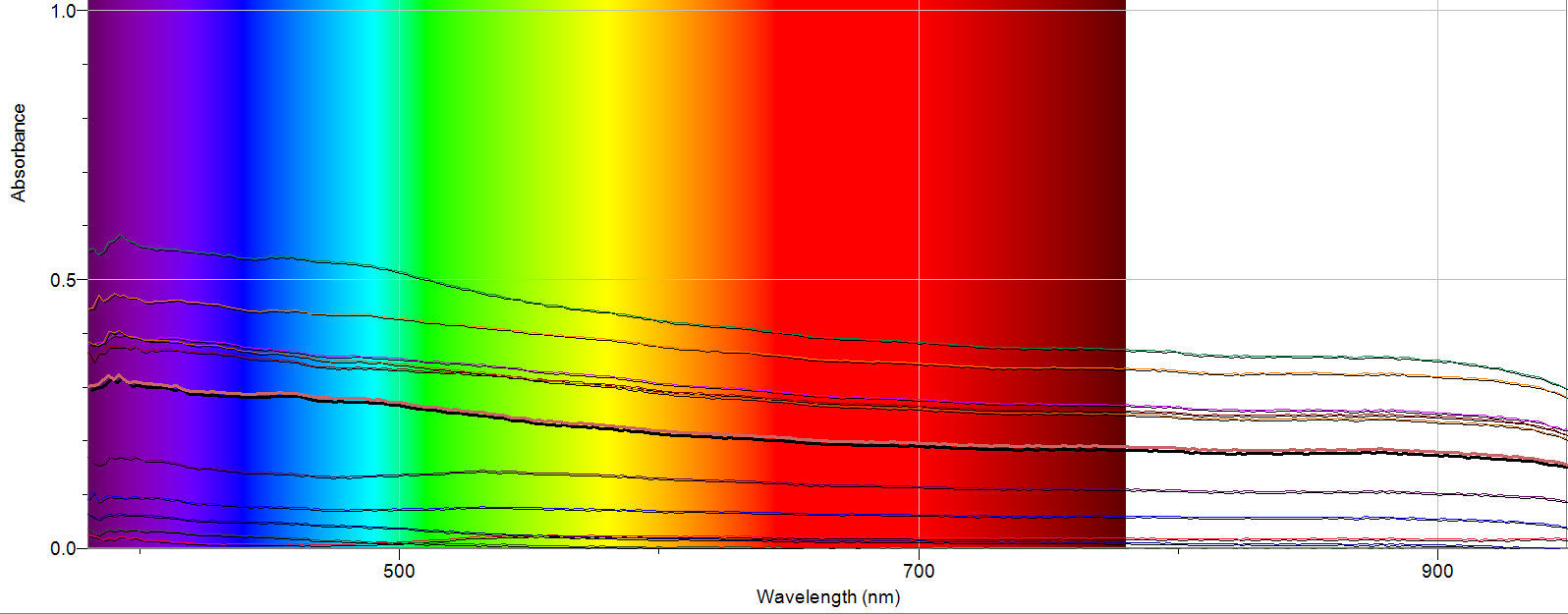

Figure 1. Raw Data from Spectrometer

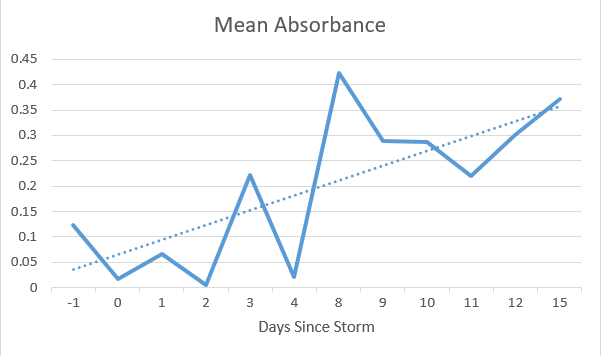

Figure 2. Data organized into a chart of Mean Absorbance vs. Days After the Storm. *Discontinuities on the X-axis are a result of no data being taken on the missing days.

Discussion

I am very pleased with the results my study revealed. I saw a different absorbance for each sample I took, with the highest mean absorbance occurring 8 days after the storm, which was around the time the snow was melting rapidly. I also observed a small but consistent peak at around 390 nm in the readings for days 3, 8, 9, 10, 11 and 15. Upon further research, I found that salt can absorb light at around this wavelength, so this peak could very well be rock salt runoff polluting the river. I would also note that one line (day 0) has a small, broad peak from 500-700 nm, whereas all of the other lines simply slope down through the spectrum. This could be the result of a sampling error, or of something that was only present in the river on that day due to the active snowfall.

Although the data is not perfect, I observed an upward trend in the mean absorbance as more days passed after the storm. This suggests that as snow from the storm melts, rock salt and other pollutants increase in concentration in the river due to runoff. This supports my original hypothesis that runoff from the snow would carry pollutants into the river.
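The trend described above can be checked numerically. The following is a minimal sketch, assuming each day has two replicate scans stored as lists of absorbance values; the function names and all numbers are hypothetical placeholders, not the measured data. It averages the replicates into one mean absorbance per day and fits a least-squares slope against days after the storm.

```python
from statistics import mean

def mean_absorbance(scan_a, scan_b):
    """Average two replicate scans into a single mean absorbance value."""
    return mean(scan_a + scan_b)

def trend_slope(days, values):
    """Least-squares slope of values against days (absorbance units per day)."""
    day_bar, val_bar = mean(days), mean(values)
    num = sum((d - day_bar) * (v - val_bar) for d, v in zip(days, values))
    den = sum((d - day_bar) ** 2 for d in days)
    return num / den

# Placeholder numbers for illustration only; they are NOT the measured data.
replicates = {
    0: ([0.10, 0.08], [0.12, 0.10]),
    3: ([0.14, 0.12], [0.16, 0.14]),
    8: ([0.22, 0.20], [0.24, 0.22]),
}
days = sorted(replicates)
means = [mean_absorbance(*replicates[d]) for d in days]
slope = trend_slope(days, means)
print(dict(zip(days, means)), slope)
```

A positive slope would be consistent with the upward trend; with only a dozen samples it should be read as a rough indication rather than a statistical test.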

Other

The science I learned during this experiment mainly concerns the inner workings of a UV-Vis spectrometer. It functions by dispersing light into its individual wavelengths (380-950 nm) and passing it through the sample in the cuvette. The detector on the far side gives a reading based on how much light reaches it, which gives us a measurement of how much light was absorbed by the sample and at which wavelengths it was absorbed. When I loaded a blank with distilled water, I calibrated the spectrometer to treat that as 0 absorbance (or 100% transmittance) and compared the river water samples to it. This allows researchers to determine what compounds are in a sample. I also learned about Beer’s law, A = εbC, which combines cell path length (b) in cm, concentration (C) in mol/L, and molar absorptivity (ε) in L/(mol·cm) to give absorbance (A), which is unitless.
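As a small worked illustration of Beer's law and of the blank calibration, here is a sketch in Python. The function names and the molar absorptivity of 100 L/(mol·cm) are made up for the example; they are not values from this experiment.

```python
import math

def absorbance(epsilon, path_cm, conc_mol_per_l):
    """Beer's law: A = epsilon * b * C (unitless)."""
    return epsilon * path_cm * conc_mol_per_l

def concentration(absorb, epsilon, path_cm=1.0):
    """Beer's law rearranged: C = A / (epsilon * b), in mol/L."""
    return absorb / (epsilon * path_cm)

def absorbance_from_transmittance(percent_t):
    """A = log10(100 / %T), so the 100% T blank reads as 0 absorbance."""
    return math.log10(100.0 / percent_t)

# Hypothetical values: epsilon = 100 L/(mol*cm), 1 cm cuvette, 0.002 mol/L.
a = absorbance(100.0, 1.0, 0.002)
print(a)                                     # about 0.2
print(concentration(a, 100.0))               # recovers about 0.002 mol/L
print(absorbance_from_transmittance(100.0))  # blank: 0.0
```

Because absorbance is proportional to concentration at a fixed path length, a sample with twice the absorbance at a given wavelength contains roughly twice as much of the absorbing species, provided the same species is responsible in both samples.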

This project fits into current science by exposing a negative environmental impact of our actions, which is a growing concern in the modern scientific community. It is becoming ever more important that we are conscious of the way we affect our surroundings and that we make changes to lessen our impact.

If I were to repeat this experiment, I would start taking samples earlier than 1 day before the storm to get a better baseline against which to compare the changes. I would also take samples from more than one river so I could draw a more general conclusion, as this one river could be an outlier in either direction. If I had 6 more weeks, I would continue to take these samples to see when the river returns to its original state, since when I finished, the absorbance was still above where it started. I would also try to identify some of the pollutants by spiking the samples with different compounds (like NaCl) and seeing which peaks were enhanced.

Finding the Purest Water Fountain on Campus

Joseph Griffen

For my project I decided to examine the water quality of different drinking fountains on campus to see whether it was the same across campus or whether certain dorms had better or worse drinking water than others. Since it would be difficult to test the quality of Vassar’s water in general, I decided a better approach was to compare each dorm’s water against that of the other dorms. This means that, while my results can point to one dorm having slightly better water than another, I won’t be making any assertions as to the quality of water at Vassar as a whole. For my experiment I ended up testing the water from 7 different drinking fountains, each from a different dorm. The dorms I chose were Main, Lathrop, Davidson, Joss, Jewett, Strong, and Raymond.

To conduct my experiment I took the water samples and placed them in a spectrometer. This shows how much light of specific wavelengths the water absorbs, which can point to minerals or other compounds in the water that may pollute it. If a water sample absorbs significantly more light in a certain part of the spectrum, it generally means that some particle in that water is causing the increase in absorption. One issue with this project, however, was determining what this increase in absorption means. Some extra particles that absorb light could be perfectly harmless, or the difference could be so minuscule that it wouldn’t have any noticeable effect on water quality. On the other hand, the increase in absorption could indicate a contaminant that is affecting our drinking water, perhaps not enough to make it unsafe to drink, but enough to make you stop and think twice about drinking it. In order to determine whether certain contaminants are present in the water in significant quantities, one would have to do a detailed analysis of the specific wavelengths that these contaminants absorb. This would require different, more precise equipment and testing than I was able to use. These results, therefore, are intended to be a jumping-off point: they can indicate which water fountains may have poorer water quality and where further examination might identify pollutants in the water with more certainty.

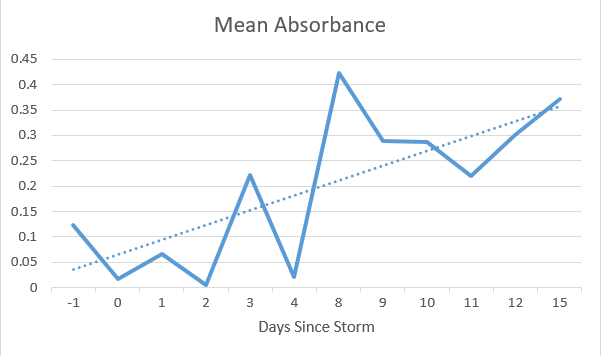

Graph 1: Absorbance vs. Wavelength (with visible spectrum)

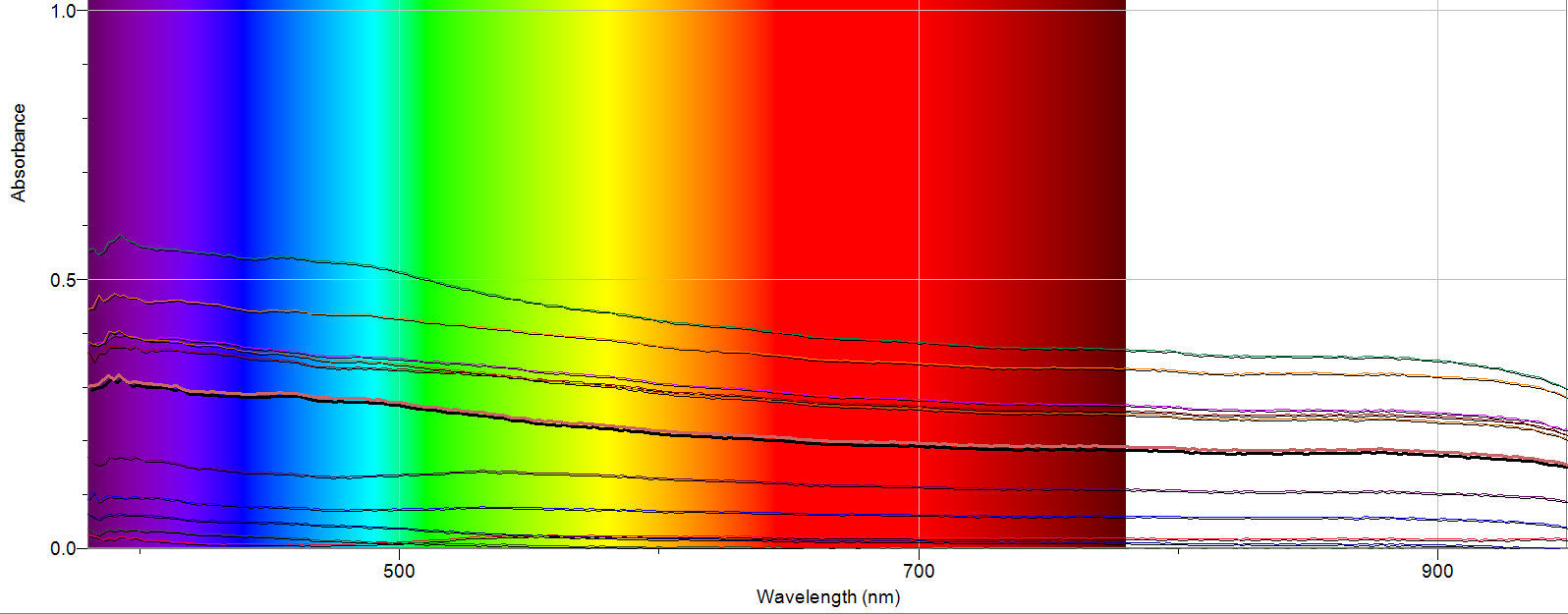

Graph 2: Absorbance vs. Wavelength (without visible spectrum)

Here are two graphs that display the results of the spectrometer. They show each water sample and how absorbent each was with regard to the different wavelengths of light. The first graph shows this information with the visible light spectrum so that one can easily see what the wavelengths correspond to. The second more clearly shows the different water samples so that they can be easily compared. Below is a key that matches the color of the line on the graph to the location the water sample was taken from.

Key:

Strong: Pink

Lathrop: Black (Darker Olive Green in Graph 2)

Davidson: Blue

Raymond: Green

Joss: Maroon

Jewett: Orange

Main: Red

The data clearly show that there are some significant differences between the water samples from different locations on campus. Initial examination reveals that at some points in the spectrum there is no discernible difference in absorption between any of the samples. For example, in the wavelengths of visible light that correspond to the color red (approximately 600-800 nm) there is no significant difference, and each sample follows approximately the same pattern. In the part of the spectrum that corresponds to yellow and green light (approx. 500-600 nm), as well as most of the infrared range measured (approx. 800-900 nm), the samples split into distinct levels of absorbency. Although there is a little fluctuation between the different samples at other points in the spectrum, we will use these two parts of the spectrum for our comparison, because in these places the curves roughly mirror each other. This suggests that the device is measuring well and that the samples are similar, except that some are absorbing more of the light than others. Additionally, these two parts of the spectrum agree with each other and give us a clear picture of which water samples are most absorbent.
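One way to make the band comparison above concrete is to average each sample's absorbance over a wavelength band and see how far apart the samples are in that band. The sketch below assumes each spectrum is a list of (wavelength in nm, absorbance) pairs; the sample names and numbers are hypothetical placeholders, not the readings from the graphs.

```python
from statistics import mean

def band_mean(spectrum, low_nm, high_nm):
    """Mean absorbance over readings with low_nm <= wavelength <= high_nm."""
    values = [a for wl, a in spectrum if low_nm <= wl <= high_nm]
    if not values:
        raise ValueError("no readings in band")
    return mean(values)

def band_spread(spectra, low_nm, high_nm):
    """Gap between the highest and lowest band mean across all samples."""
    means = [band_mean(s, low_nm, high_nm) for s in spectra.values()]
    return max(means) - min(means)

# Placeholder spectra for illustration only; NOT the measured data.
spectra = {
    "sample_a": [(550, 0.30), (700, 0.10), (850, 0.25)],
    "sample_b": [(550, 0.20), (700, 0.10), (850, 0.15)],
}
print(band_spread(spectra, 500, 600))  # samples separate in this band
print(band_spread(spectra, 650, 750))  # samples overlap in this band
```

A band where the spread is near zero matches the description of the red region, and a band with a clear spread matches the 500-600 nm and 800-900 nm regions used for the comparison.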

So, in examining the results we find that we can easily rank the 7 locations in terms of how absorbent they are. The results look like this:

Most Absorbent

- Strong

- Lathrop

- Davidson

- Raymond

- Joss

- Jewett

- Main

Least Absorbent

So, what exactly does this tell us? First, we need to recognize the limitations of this analysis. We are simply comparing the 7 water samples against each other, not against water samples known to be drinkable or non-drinkable. Hopefully, we can assume that all of the water Vassar provides for us to drink is of a permissible quality. Still, as the results clearly show, not all the water is identical, and there are obvious variations across campus. Since we are simply comparing the samples against each other, a ranking of the 7 is a better way to display the results than figures of their actual absorbency. Indeed, in order to get any figure of absorbency we would have to pick one (or several) wavelengths to examine, and the selections would end up being more or less arbitrary. Therefore, a more holistic examination of the results will yield more relevant information, and the ranking of the dorms is the best way to display the results. It is, however, important to notice that there is a significant jump between the absorbency of Raymond and that of Joss. Within each group the samples are relatively close to each other, but they split into two distinct groups: Strong, Lathrop, Davidson, and Raymond are significantly more absorbent than Joss, Jewett, and Main.
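If one did settle on a single score per sample (for example a band-averaged absorbance, which as noted is a somewhat arbitrary choice), the ranking and the split into two groups could be found mechanically. This is only a sketch: the fountain labels and scores are hypothetical placeholders, not values read from the graphs.

```python
def rank_samples(scores):
    """Return sample names sorted from most to least absorbent."""
    return sorted(scores, key=scores.get, reverse=True)

def largest_gap(scores):
    """Find the adjacent pair in the ranking with the biggest drop in score."""
    ranked = rank_samples(scores)
    gaps = [(scores[a] - scores[b], a, b) for a, b in zip(ranked, ranked[1:])]
    return max(gaps)  # (gap size, higher sample, lower sample)

# Placeholder scores for illustration only; NOT the measured data.
scores = {
    "fountain_1": 0.31,
    "fountain_2": 0.29,
    "fountain_3": 0.12,
    "fountain_4": 0.10,
}
print(rank_samples(scores))
gap, upper, lower = largest_gap(scores)
print(f"largest jump is between {upper} and {lower}")
```

The pair returned by `largest_gap` marks where the ranking would naturally divide into a more absorbent group and a less absorbent group.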

Now that we’ve discussed exactly what the results show us, we need to know how to interpret them. They show absorbency, but what exactly does this mean in terms of water quality? As I explained earlier, this part isn’t as clear as one would hope. Based on the way the spectrometer works, we know that some sort of particle is more abundant in the water from Strong than in the water from Main. What these particles are is hard to tell, though. More careful and precise analysis of the specific wavelengths where we see disparities could perhaps reveal more about which particles are present, whether they are present in large enough quantities to be harmful, and whether the particles themselves are harmful at all. Water quality is difficult to measure objectively and often requires multiple tests to check for different possible contaminants. What we do have evidence of, however, is that there are significant differences in the water across campus. In particular, the evidence suggests that the water from Strong has the most extra particles in it, while the water from Main has the least. Further examination could reveal the nature of these extra particles and whether they are good, bad, or neutral, but they are present, and their presence suggests that further examination may indeed be warranted.

I would say that the results are roughly what I had predicted. Although I didn’t make any actual predictions about which houses would have better or worse water quality, I expected that I would find significant differences between their absorbency in some spectrums and that in others the samples would yield nearly identical results and this ended up being the case.

The science I learned by doing this project mostly related to wavelengths of light and water quality. I learned a lot about how a spectrometer works and what it can tell us about what is in a liquid. By measuring how much light of each wavelength is able to pass through we can learn a lot about the makeup of a substance. Since certain particles in water are known to absorb certain wavelengths of light, knowing which wavelengths are absorbed more significantly can indicate the presence of different materials. It’s interesting to see how something like light can tie into water quality. The two seem like fairly separate fields, so it’s interesting to see how they can overlap like this. It’s also neat to see how we can use light to detect the presence of different particles.

Additionally the application of this for making water quality testing easier is interesting. By using these comparatively simple means of analyzing the contents of water samples, scientists can save significant amounts of time and resources on more complex methods of detecting contaminants in water. Spectroscopy is a promising tool for analyzing water quality that hopefully can contribute to improvements in water quality across the world.

If I were to do this project again I would conduct further research into spectrometers and how they can be used to analyze water quality. I would find spectrometers that can specifically analyze the parts of the spectrum that correspond to contaminants commonly found in drinking water. By focusing precisely on these wavelengths I could check for the presence of contaminants in our drinking water and get more specific results on the overall quality of drinking water at Vassar. By focusing specifically on drinking water and checking for specific contaminants, I would be able to make more concrete conclusions about the quality of the water and how safe it is for drinking, instead of just comparing the samples against each other.

If I were to conduct the experiment for another six weeks without significantly changing my approach in the way I discussed in the previous paragraph, I think I would just focus on increasing the sample size. I only took one sample from each dorm, and if I took five or ten from different drinking fountains in each building and averaged them, I could gather more evidence about the water quality in the building as a whole. I would also expand the experiment to the rest of the houses and possibly other buildings on campus. An expanded survey of water quality could yield more interesting results and analysis (e.g., is the water quality better in dorms than in academic buildings?). However, before I expanded the experiment I would want to work out more precise measurements, which would allow me to draw better conclusions about what the results can actually tell us about the water quality, as I’ve discussed several times already.
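The averaging step proposed above could look like the following sketch, which reports a mean and a standard error for several readings from one building. The function name and the readings are hypothetical placeholders; no such repeated measurements were taken in this experiment.

```python
from math import sqrt
from statistics import mean, stdev

def summarize(readings):
    """Return (mean, standard error) for repeated readings from one building."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to estimate spread")
    return mean(readings), stdev(readings) / sqrt(n)

# Placeholder band-averaged absorbances from five fountains in one building.
readings = [0.10, 0.12, 0.14, 0.12, 0.12]
avg, std_err = summarize(readings)
print(f"mean = {avg:.3f}, standard error = {std_err:.4f}")
```

With a standard error for each building, two buildings whose means differ by much less than their standard errors would not be treated as meaningfully different, which a single sample per dorm cannot show.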

In conclusion, while this experiment ran into some limitations regarding how much the results can actually tell us about the water quality itself, I think it was successful in providing evidence that there are significant differences in the water in different places on campus. Moreover, it was able to suggest that the water quality was perhaps better in some locations (like Main) than in others (like Strong). While these results remain inconclusive as to exactly how good the water quality is, they do point to something that should be explored. Further, more precise research into the water quality at Vassar would be interesting. In particular, as this experiment showed, research into the differences in quality between locations yields interesting results that should be explored further.