My Computational Physics final project models fluid flow by relying on the analogy between Electric Potential and Velocity Potential, both of which satisfy Laplace’s Equation. I used the Gauss-Seidel Method to solve for the potential on a grid and then plotted the velocity/electric field as vectors that follow the changes in velocity/electric potential, which depend on each point’s proximity to metal conductors/walls of pipes.

Background

Why Fluid Dynamics?

My interest in investigating Fluid Dynamics stemmed from a lecture given on campus in early February by Dr. Kristy Schlueter-Kuck, a Mechanical Engineer whose research focuses on applying coherent pattern recognition techniques to a variety of problems across different fields. One example showed these techniques applied to devices that aid in the study of ocean surface currents, which allowed for more accurate modeling of fluid dynamics. I found this topic particularly fascinating because fluid dynamics is a type of mechanical physics that we do not have a chance to explore in our curriculum, and for the simple fact that modeling invisible interactions is always a cool topic to explore.

Modeling Fluid Flow

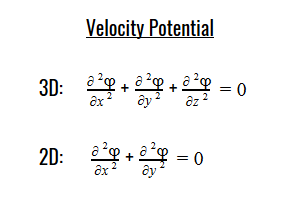

In completing research about Fluid Dynamics, I gained a better understanding about the physics behind Fluid Flow and was able to study the relationship Fluid Velocity had to Laplace’s Equation and how Velocity Potential obeys this equation under ideal conditions.

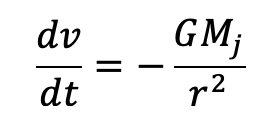

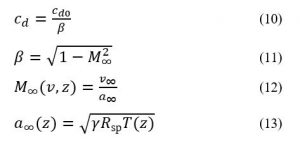

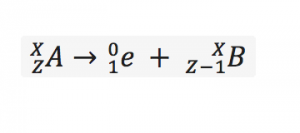

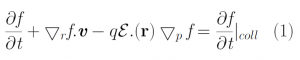

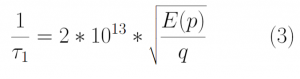

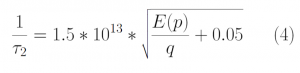

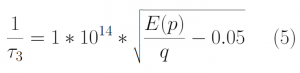

Laplace’s equation states that the sum of the second-order partial derivatives of a function, with respect to the Cartesian coordinates, equals zero:

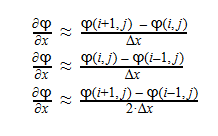

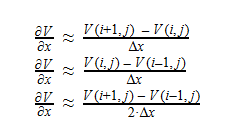

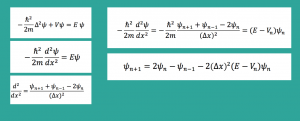

Using Laplace’s Equation, we can move toward solving for the Velocity Potential. In order to create the equation to solve for the Velocity Potential, we must first determine the first-order derivative of the potential. The first derivative is essentially the change in potential with respect to the appropriate Cartesian coordinate, which in this case is the x-coordinate. It can be written in a variety of ways, as seen below:

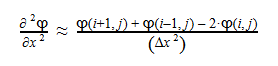

The second-derivative can be expressed as follows:

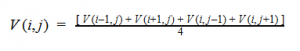

By plugging the second-derivative for each Cartesian coordinate into Laplace’s equation, we can find the equation to solve for the Velocity Potential. This equation can be simplified by assuming that delta_x=delta_y=delta_z. It tells us that the value of potential at any point is the average of its neighboring points.
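For reference, the standard finite-difference form of this argument can be written out as follows. This is a sketch in generic notation: the symbol \phi for the Velocity Potential and the grid indices i, j, k are assumptions introduced here and may not match the notation in the figures.

```latex
% Forward-difference first derivative in x
\frac{\partial \phi}{\partial x} \approx \frac{\phi(i+1,j,k) - \phi(i,j,k)}{\Delta x}

% Central-difference second derivative in x (same pattern in y and z)
\frac{\partial^2 \phi}{\partial x^2} \approx
  \frac{\phi(i+1,j,k) + \phi(i-1,j,k) - 2\,\phi(i,j,k)}{(\Delta x)^2}

% Substituting into Laplace's equation with \Delta x = \Delta y = \Delta z
\phi(i,j,k) = \frac{1}{6}\Big[ \phi(i+1,j,k) + \phi(i-1,j,k) + \phi(i,j+1,k)
            + \phi(i,j-1,k) + \phi(i,j,k+1) + \phi(i,j,k-1) \Big]

% Two-dimensional case (four neighbors)
\phi(i,j) = \frac{1}{4}\Big[ \phi(i+1,j) + \phi(i-1,j) + \phi(i,j+1) + \phi(i,j-1) \Big]
```

The two-dimensional form, with four neighbors, is the one that applies to a flat grid of potentials.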

In class, we studied Laplace’s Equation in Chapter 5 of the textbook as it applied to Electrostatic conditions where we were solving for Electric Potential and the Electric Field instead.

By solving for the Electric Potential in a similar fashion to how we solved for the Velocity Potential, we can see how these potentials are algebraically the same.

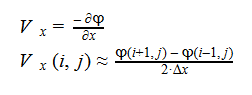

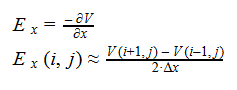

Additionally, we can find an analogous relationship between the negative derivative of each potential. The negative derivative value for the Electric and Velocity Potential for each Cartesian coordinate is equivalent to each respective component of the Electric Field and Velocity.
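To make the negative-derivative relationship concrete, here is a minimal Python sketch (not the project code) that turns a grid of potential values into field components. The function name `negative_gradient`, the grid spacing `h`, and the convention that columns run along x and rows along y are all assumptions made for illustration.

```python
def negative_gradient(V, h=1.0):
    """Return (Fx, Fy), the negative gradient of a 2D grid V[row][col].

    Columns are taken as the x direction and rows as the y direction.
    Interior points use central differences; edge points fall back to
    one-sided differences. The grid needs at least 2 rows and 2 columns.
    """
    rows, cols = len(V), len(V[0])
    Fx = [[0.0] * cols for _ in range(rows)]
    Fy = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Clamp neighbor indices so edges use a one-sided difference.
            j_lo, j_hi = max(j - 1, 0), min(j + 1, cols - 1)
            i_lo, i_hi = max(i - 1, 0), min(i + 1, rows - 1)
            Fx[i][j] = -(V[i][j_hi] - V[i][j_lo]) / ((j_hi - j_lo) * h)
            Fy[i][j] = -(V[i_hi][j] - V[i_lo][j]) / ((i_hi - i_lo) * h)
    return Fx, Fy


# Potential falling linearly from 6 to -6 across five columns.
V = [[6.0, 3.0, 0.0, -3.0, -6.0] for _ in range(3)]
Fx, Fy = negative_gradient(V)
```

For a potential that falls linearly from left to right, the x-component comes out the same at every point and the y-component vanishes. Read as an Electric Field or as a Fluid Velocity, the vectors point from high potential toward low potential.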

Since we know that both Velocity Potential and Electric Potential similarly obey Laplace’s Equation, and that there is an analogous relationship between Fluid Velocity and Electric Field, I thought it would be interesting to use this relationship to model Fluid Flow through the application of the Gauss-Seidel method, a method we also covered in Chapter 5 of our textbook.

Gauss-Seidel Method

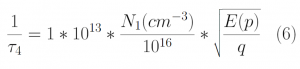

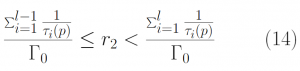

Chapter 5 teaches us about both the Jacobi and Gauss-Seidel Methods in the context of the Relaxation Method. Both techniques converge computationally on the potential at each point by repeatedly replacing it with the average of its four neighbors. The difference is that the Jacobi Method computes every new value from the previous iteration’s values, while the Gauss-Seidel Method uses each newly calculated value immediately in the averages that follow. We are able to use Laplace’s equation to solve for the potential at each point and utilize these methods to model the converging potentials, assuming we only know the boundary conditions.
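The difference between the two methods is easiest to see in a single pass over a small grid. The following Python sketch is illustrative only; the function names `jacobi_sweep` and `gauss_seidel_sweep` and the tiny 3x4 grid are assumptions chosen for the example.

```python
def jacobi_sweep(V):
    """One Jacobi pass: every average reads the values from before the pass."""
    old = [row[:] for row in V]
    for i in range(1, len(V) - 1):
        for j in range(1, len(V[0]) - 1):
            V[i][j] = 0.25 * (old[i + 1][j] + old[i - 1][j]
                              + old[i][j + 1] + old[i][j - 1])


def gauss_seidel_sweep(V):
    """One Gauss-Seidel pass: averages read values already updated in this pass."""
    for i in range(1, len(V) - 1):
        for j in range(1, len(V[0]) - 1):
            V[i][j] = 0.25 * (V[i + 1][j] + V[i - 1][j]
                              + V[i][j + 1] + V[i][j - 1])


def make_grid():
    # 3 rows x 4 columns: left column held at 1, everything else starts at 0.
    return [[1.0, 0.0, 0.0, 0.0] for _ in range(3)]


jacobi_grid = make_grid()
jacobi_sweep(jacobi_grid)              # interior row becomes [0.25, 0.0]

gauss_seidel_grid = make_grid()
gauss_seidel_sweep(gauss_seidel_grid)  # interior row becomes [0.25, 0.0625]
```

After one pass, the boundary value has already reached the second interior point under Gauss-Seidel but not under Jacobi, because Gauss-Seidel reads values updated earlier in the same pass.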

By focusing on the Infinite Parallel Plates example and unpacking the mechanics behind each Method prior to the start of this project, I was able to achieve a better understanding of how the potentials were calculated and gained the tools to apply the Gauss-Seidel to more complicated scenarios.

Applications

My initial project proposal intended to explore fluid flow through the scenario of blood flow through veins in the body. I would have then explored cases that placed obstacles representing pieces of cholesterol on the walls of the veins, or directly in the path of the flow, to model the impact these factors would have on the velocity vectors inside the vein. As my project developed, I realized that restricting my modeling scenarios to a single vein/tube was limiting the number of scenarios that the Gauss-Seidel Method could model, as well as underutilizing the analogous relationship between the Electric and Velocity Potential that I had also wanted to explore.

I then decided to recreate several of the Electric Potential/Electric Field cases demonstrated in the textbook and from there, analyze the figure and determine its analogous relationship to fluid flow. It is a direct way of making connections between the Electrostatic and Fluid Dynamic analogous relationship as supported by their reliance on Laplace’s Equation. Although it is not intuitive, both the Electric and Velocity Potential are solutions for Laplace’s Equations and therefore yield the same results under the same boundary conditions. I used figures solving for Electric Potential and Electric Field to inform me about the analogous relationship it holds to the Velocity Potential of fluid as well as the changing Velocity values due to the potential changes. I used my previous knowledge of how Electric Field vectors move from high to low potential to simulate fluid flow in pipes of varying geometry. In the last cases I modeled, I added blockages in an attempt to simulate more realistic scenarios for flow in the pipes.

Ultimately, my overall goal for this project has been multifaceted. I planned to investigate the physical phenomenon of Fluid flow and was able to do so by using Laplace’s Equation as the basis for an analogy between Velocity Potential (Fluid Dynamics) and Electric Potential (Electrostatics). I additionally utilized my previous knowledge about the Gauss-Seidel Method and its applications to Electrostatics to apply the method to Fluid Dynamics and gain a better understanding of the aforementioned relationship and its further applications.

Methods

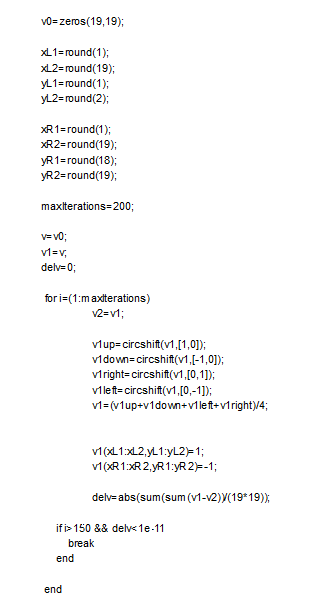

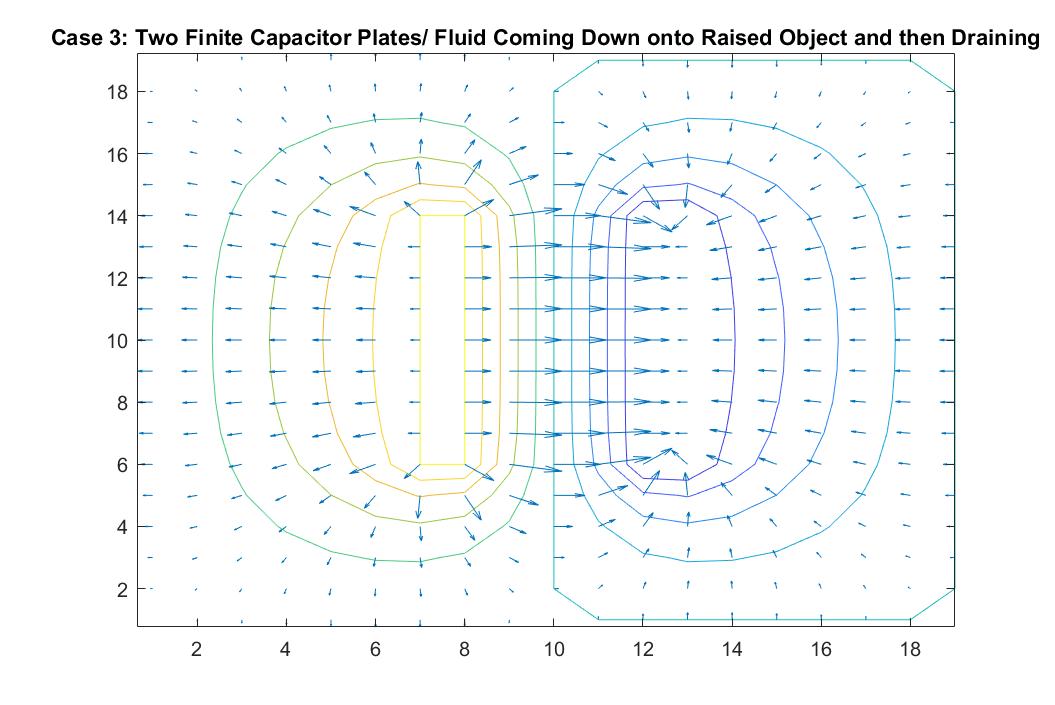

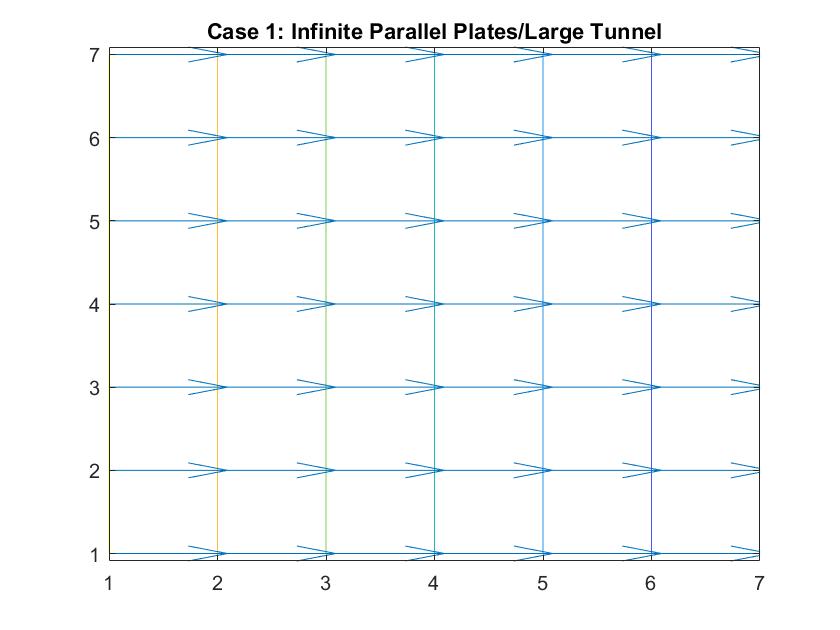

To thoroughly explain my modeling process, I will walk through the steps I took to model Case 1: Infinite Parallel Plates / Large Tunnel. I will showcase both approaches I took and explain some of the choices I made. This groundwork allowed me to understand how to apply boundary conditions, how to place plates of fixed potential in the matrix, and how to interpret the resulting figures and the physics behind the arrows and contour lines.

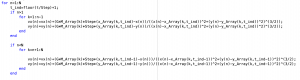

In order to apply the Gauss-Seidel method, I must first create a matrix of zeros. The Gauss-Seidel method needs a value at every grid point at all times: it does not simply move through the matrix filling in one point at a time, because finding the average at any point requires the current potentials of its neighbors. Our matrix of zeros is our initial guess.

First Method:

In my first attempt at creating this model, I wrote in the potential for each column between the two parallel plates. This didn’t leave much for the Gauss-Seidel Method to do. I created a for loop that ran at most 20 times, the maximum number of iterations I called for. DelV allows the loop to break early if the difference in average potential between successive iterations is smaller than a preset amount. I used a 2:6 range for i and j because if the loop started at (1,:) or (:,1), one of the neighbors used in the average would be at (0,:) or (:,0), which would cause an array indexing error. The for loop goes through all the interior positions for i and j, uses the discretized Laplace’s Equation to find the new potential at each point as the average of its neighbors, and stores it in the array. The contour function plots the equipotential lines, while the quiver function draws the field/velocity vectors that correspond to the change in potential. The Electric Field is determined from the gradient of the matrix values, which is separated into its two components.
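The loop described above can be sketched in Python as follows. This is not the original code: the function name `relax_parallel_plates`, the 7x7 grid (matching the 2:6 interior range in 1-based indexing), the linear ramp on the top and bottom rows that stands in for the infinite extent of the plates, and the use of the largest single-point change as the stopping test are all assumptions.

```python
def relax_parallel_plates(n=7, v_left=6.0, v_right=-6.0, max_iter=20, tol=1e-3):
    """Gauss-Seidel relaxation between two plates on an n x n grid.

    Returns the grid and the number of sweeps performed.
    """
    # Linear ramp between the plates, used only on the top and bottom rows
    # (an assumption standing in for plates that extend infinitely).
    ramp = [v_left + (v_right - v_left) * j / (n - 1) for j in range(n)]
    V = [[0.0] * n for _ in range(n)]      # initial guess: all zeros
    for i in range(n):
        V[i][0] = v_left                   # left plate
        V[i][n - 1] = v_right              # right plate
    V[0] = ramp[:]
    V[n - 1] = ramp[:]

    sweeps_done = 0
    for sweep in range(1, max_iter + 1):
        sweeps_done = sweep
        max_change = 0.0
        # Interior points only, so every neighbor index stays inside the grid.
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                new = 0.25 * (V[i + 1][j] + V[i - 1][j]
                              + V[i][j + 1] + V[i][j - 1])
                max_change = max(max_change, abs(new - V[i][j]))
                V[i][j] = new              # reused immediately (Gauss-Seidel)
        if max_change < tol:               # plays the role of the DelV check
            break
    return V, sweeps_done


V, sweeps = relax_parallel_plates(max_iter=500, tol=1e-9)
```

With a tight tolerance and enough sweeps allowed, the interior settles onto a straight-line drop in potential from one plate to the other, which is the uniform-field result expected for Case 1.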

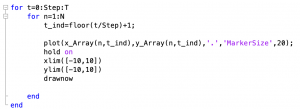

Second Method:

My second attempt at modeling the infinite parallel plates involved a different approach. In my initial conditions, I created each plate by giving its length, width, and location in the matrix. Inside the for loop, I assigned a potential value to the section of the matrix where I had designated the plate to go. Placing this assignment in the for loop ensured that the plate kept its value during every iteration, enforcing its boundary conditions. I solved for the potential similarly to my last attempt, but without going through the i and j values individually. Instead, I used shifted copies of the matrix so that each point could look at its neighboring potential values and use them to solve for the average at that point. I also included an if statement that allowed the for loop to break if the difference in average potential between successive iterations was smaller than the preset amount and the majority of iterations were completed. Though not shown in this image, I still used contour and quiver to plot the equipotential lines and velocity vectors.
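A rough Python sketch of this second approach is given below. It is not the original code: the function name `relax_with_fixed_cells`, the dictionary of held cells, the zero-potential outer border, and the 11x11 example layout are all assumptions. One caveat worth stating: an update that computes the whole grid at once from shifted neighbor values reads only the previous iteration, which is strictly a Jacobi-style step. The sketch follows that whole-grid form, and the original implementation may differ.

```python
def relax_with_fixed_cells(n_rows, n_cols, fixed, max_iter=2000, tol=1e-6):
    """Relax a grid whose outer border is 0 and whose `fixed` cells are held.

    fixed: dict mapping (row, col) -> potential (hypothetical plate layout).
    """
    V = [[0.0] * n_cols for _ in range(n_rows)]
    for it in range(max_iter):
        # Re-impose the plates every iteration so they keep their values.
        for (i, j), value in fixed.items():
            V[i][j] = value
        # Whole-grid update from the four shifted neighbors.
        new = [row[:] for row in V]
        for i in range(1, n_rows - 1):
            for j in range(1, n_cols - 1):
                new[i][j] = 0.25 * (V[i + 1][j] + V[i - 1][j]
                                    + V[i][j + 1] + V[i][j - 1])
        for (i, j), value in fixed.items():
            new[i][j] = value
        change = max(abs(new[i][j] - V[i][j])
                     for i in range(n_rows) for j in range(n_cols))
        V = new
        # Stop only when converged AND more than half the iterations are done.
        if change < tol and it + 1 > max_iter // 2:
            break
    return V


# Two short vertical plates at +1 and -1 inside an 11 x 11 box.
plates = {(r, 3): 1.0 for r in range(3, 8)}
plates.update({(r, 7): -1.0 for r in range(3, 8)})
V = relax_with_fixed_cells(11, 11, plates)
```

Because the plates are described by their cells rather than by loop ranges, changing the geometry only means changing the `plates` dictionary, which mirrors the flexibility described above.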

I found using the second method allowed me to have more control over the matrix in the more complicated models. Not having to figure out the number of i and j iterations that needed to take place for each case that called for different geometries allowed me to focus on playing around with the boundary conditions.

Results

Summary

Part I: Fluid Flow (Over Objects / Drain)

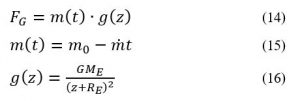

- Case 1: Infinite Parallel Plates / Fluid Flow Through a Large Tunnel

- Case 2: Hollow Metallic Prism w/Solid, Metallic Inner Conductor / Fluid Pouring Down onto Raised Object

- Case 3: Two Finite Capacitor Plates / Fluid Coming Down onto Raised Object and then Draining

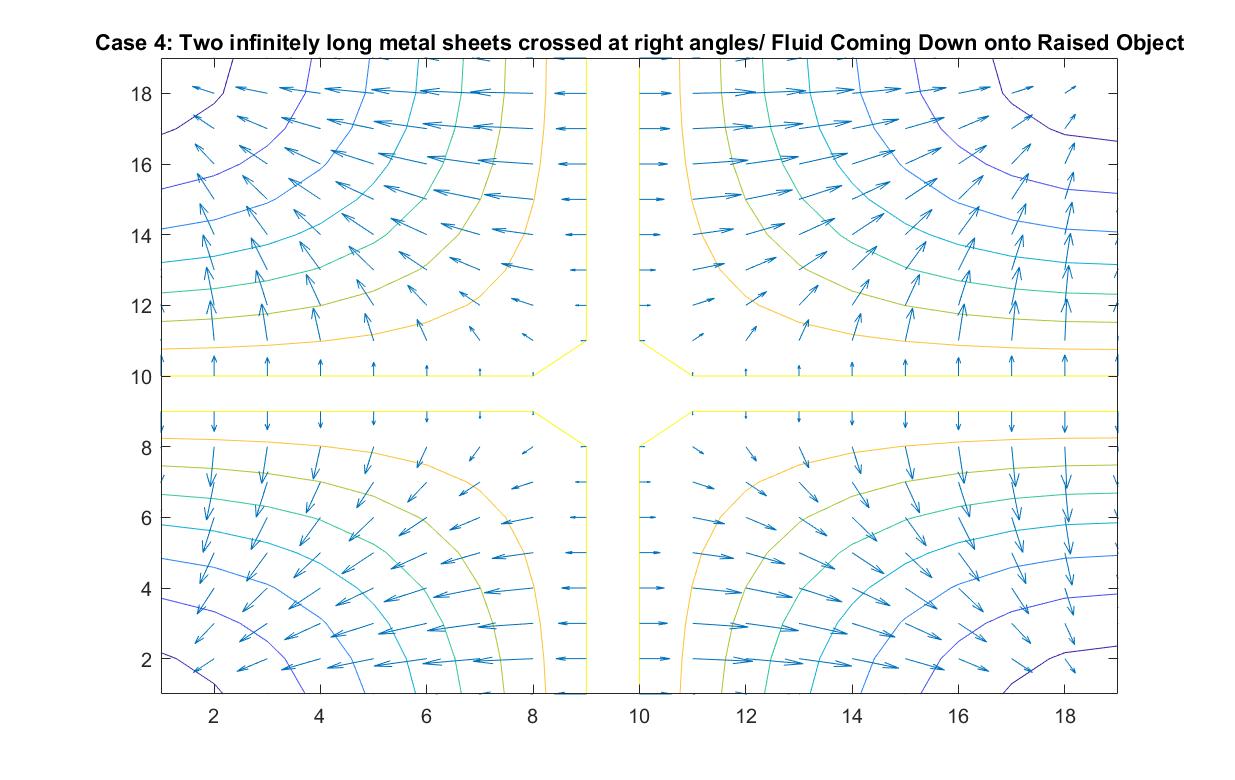

- Case 4: Two Infinitely Long Metal Sheets Crossed at Right Angles / Fluid Pouring Down onto Raised Object

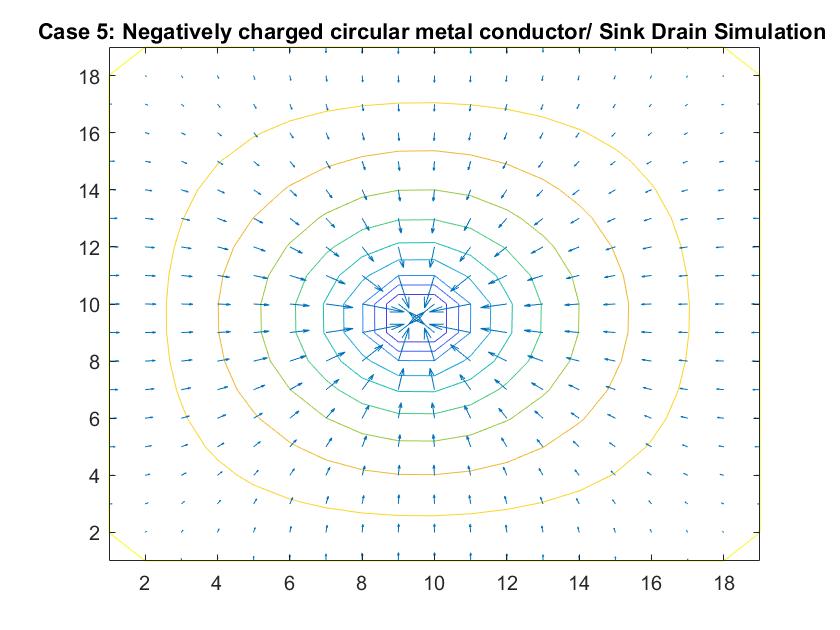

- Case 5: Negatively Charged Inner Metal Conductor / Sink Drain Simulation

Part II: Fluid Flow in a Pipe

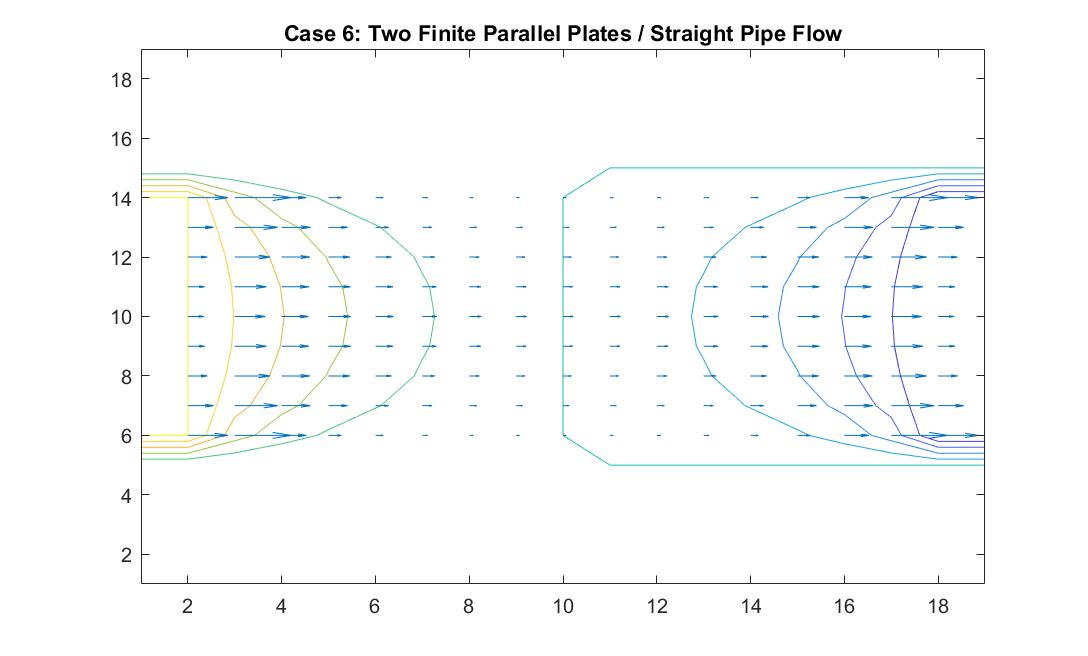

- Case 6: Two Finite Parallel Plates / Straight Pipe Flow

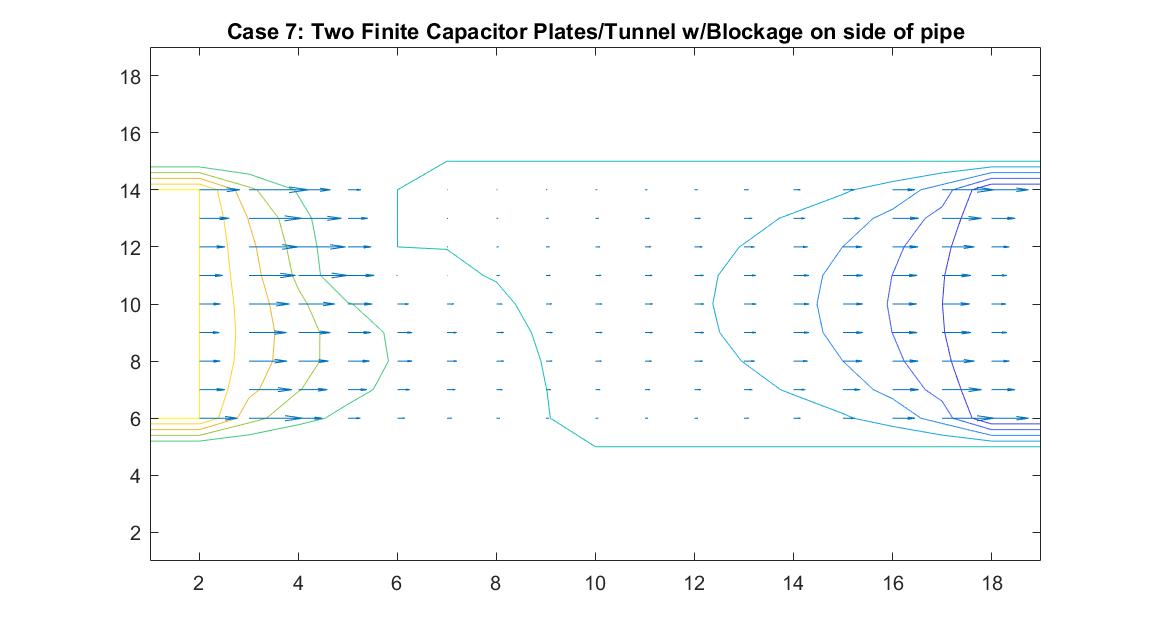

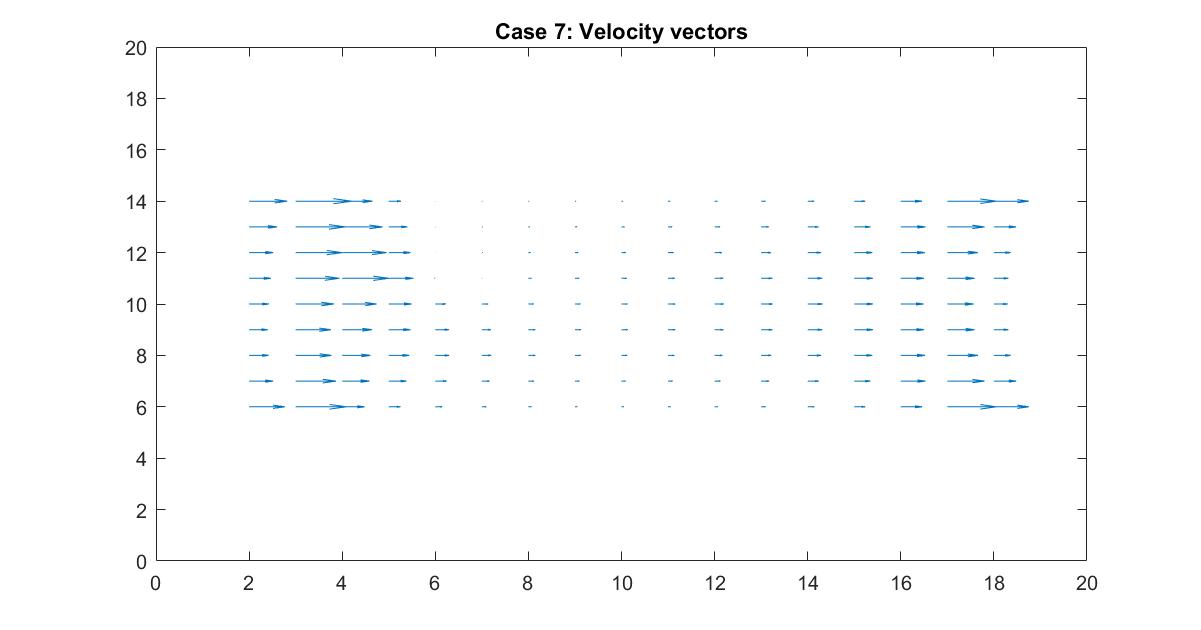

- Case 7: Two Finite Parallel Plates / Straight Pipe Flow w/blockage on Wall of Pipe

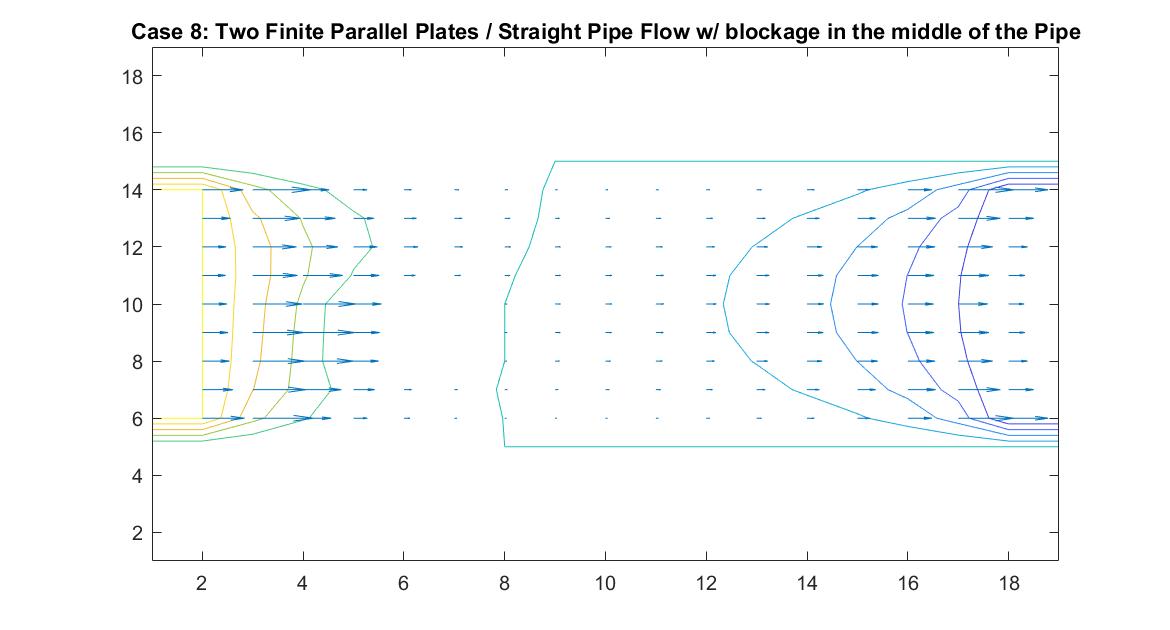

- Case 8: Two Finite Parallel Plates / Straight Pipe Flow w/ blockage in the middle of the Pipe

- Case 9: Curved Pipe or Electric Field through a Tube

_______________________________________________________________________________________________

Case 1: Infinite Parallel Plates / Fluid Flow Through a Large Tunnel

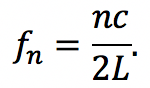

In the graph above, I modeled the behavior of the electric field between two infinitely long parallel plates using Method 1, which I described previously. The left plate had a potential value of 6 and the right plate had a value of -6. The equipotential lines represent where the potential is constant and they are always perpendicular to the electric field. The potential difference between equipotential lines is constant. We can see that the electric field is constant because of the equal distance between each line and because the arrow vectors are all the same length.
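As a quick check on why the field is constant here, the ideal result can be written out directly. The plate separation d and the coordinate x measured from the left plate are symbols introduced for this sketch; the separation is not given in physical units above.

```latex
% Potential varies linearly between the plates (V_L = 6, V_R = -6)
V(x) = V_L + \left(V_R - V_L\right)\frac{x}{d} = 6 - \frac{12\,x}{d}

% The field is the negative derivative, which does not depend on x
E_x = -\frac{dV}{dx} = \frac{V_L - V_R}{d} = \frac{12}{d}
```

Since the result does not depend on x, every arrow has the same length and the equipotential lines are evenly spaced.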

Analogous to fluid flow, in large tunnels, the flow is a steady, uniform stream, which is the ideal case to model. Here, the equipotential lines represent the velocity potentials and the arrows represent the velocity vectors of fluid flow.

This graph similarly models the electric field/fluid flow through a tunnel but uses Method 2. I set the potentials of the two plates to 1 and -1, respectively, and allowed the Gauss-Seidel Method to fill in the potentials in between the plates.

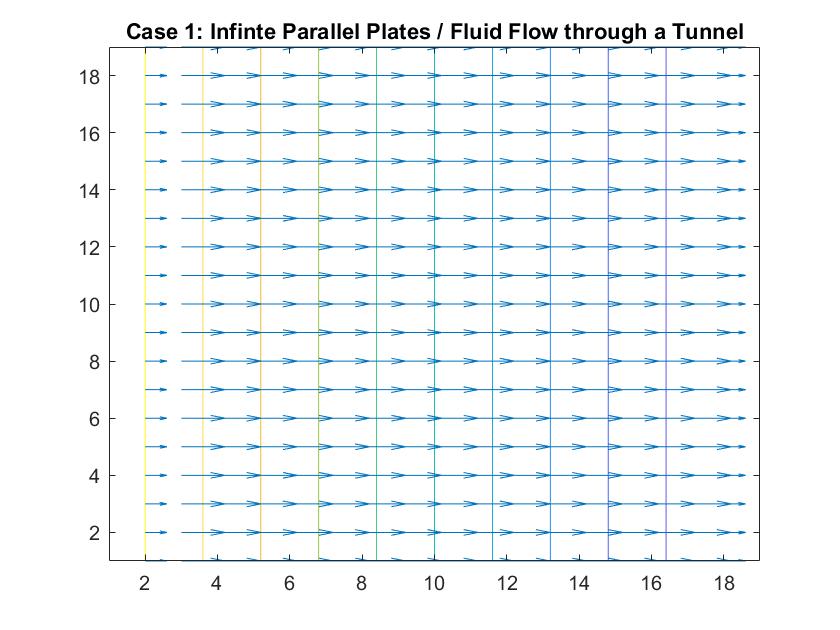

Case 2: Hollow Metallic Prism w/Solid, Metallic Inner Conductor / Fluid Pouring Down onto Raised Object

In the graph above, I modeled the behavior of the electric field around an inner conductor inside a box of neutral charge using Method 1. I set the conductor equal to a potential of 1 and the outer box equal to 0. I included an if statement in my code that made the for loop skip the locations in the matrix that represent the inner conductor, so that their value of 1 was not overwritten while the Gauss-Seidel method calculated the rest of the potential values. The distance between the equipotential lines represents the strength of the electric field: the lines are closest together near the inner conductor, where the electric field is strongest, which corresponds to the longer vectors. The lines become further apart the further away we are from the conductor, where the electric field weakens. A positive test charge released near the conductor would convert its potential energy into kinetic energy as it moves outward, so its kinetic energy increases the further it goes.
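The skip-the-conductor idea can be sketched in Python as follows. This is an illustration rather than the original code: the function name `relax_box_with_conductor`, the 11x11 grid, the 3x3 conductor occupying indices 4 to 6, and the fixed number of sweeps are all assumptions.

```python
def relax_box_with_conductor(n=11, lo=4, hi=6, v_inner=1.0, sweeps=500):
    """Gauss-Seidel relaxation in a zero-potential box around a square conductor."""
    V = [[0.0] * n for _ in range(n)]          # outer box stays at 0
    for i in range(lo, hi + 1):
        for j in range(lo, hi + 1):
            V[i][j] = v_inner                  # inner conductor
    for _ in range(sweeps):
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                # Skip conductor cells so their potential is never overwritten.
                if lo <= i <= hi and lo <= j <= hi:
                    continue
                V[i][j] = 0.25 * (V[i + 1][j] + V[i - 1][j]
                                  + V[i][j + 1] + V[i][j - 1])
    return V


V = relax_box_with_conductor()
```

Along a line from the conductor to the wall, the relaxed potential falls steadily from 1 toward 0, and the same values appear on all four sides of the conductor.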

In terms of fluid flow, we can imagine the fluid continuously pouring down “into the page” onto the object at the center, which we can imagine as a raised platform, and then pouring off the sides into drains at the edge of the box. The equipotential lines show the changing energy / velocity potential inside the fluid flow, and the vectors indicate the direction and speed of the fluid flow. It can also be described as a steady, uniform flow because even though the fluid is going in different directions, it is consistently and symmetrically flowing in these directions.

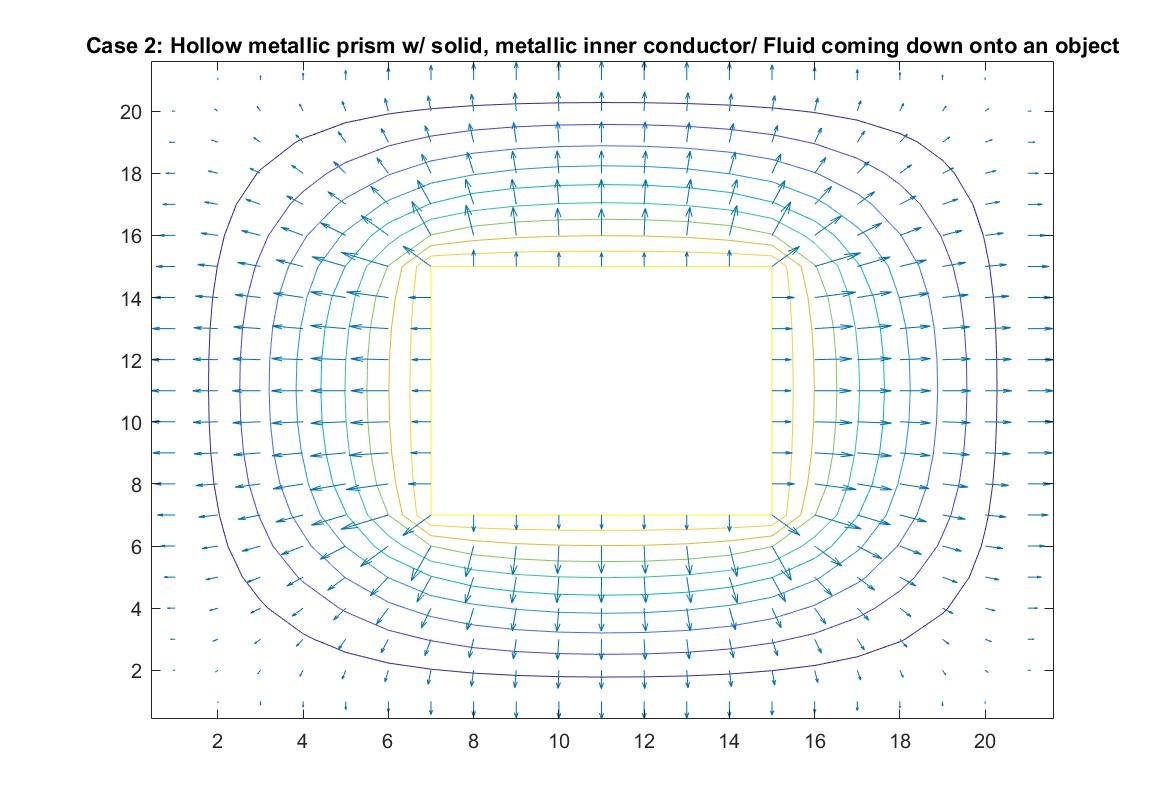

Case 3: Two Finite Capacitor Plates / Fluid Coming Down onto Raised Object and then Draining

In the graph above, I modeled the behavior of the electric field between two finite parallel capacitor plates inside a neutral box using Method 2. I set the potential values of the plates to 1 and -1, respectively, and re-imposed the neutral boundary conditions of the box in the for loop. As expected, the electric field vectors point from the positive plate to the negative plate, with the electric field strongest in between the plates and with additional vectors pointing away from the positive plate and in toward the negative plate. The equipotential lines encircle each plate, showing the highest potential values around the positive plate and the lowest around the negative plate. The electric field gets weaker the further it is from the plates, as the distance between the equipotential lines increases. The darker color of these lines also correlates to the increase in kinetic energy.

This increase in kinetic energy goes hand in hand with the fluid flow analogy for this scenario. We can imagine the positive plate being a raised object and the negative plate acting as a rectangular drain. The direction of the velocity vectors would then inform us that the fluid being poured onto the object is cascading down in the right direction into the drain at a high speed (as shown by the longer vectors), and that the vectors pointing away from the raised platform do so because the fluid is cascading off of it outward, similar to Case 2. We can imagine the drain being a gradual decline, explaining why the velocity vectors grow in length as they get closer to the drain.

Case 4: Two Infinitely Long Metal Sheets Crossed at Right Angles / Fluid Pouring Down onto Raised Object

In the graph above, I modeled the behavior of the electric field around two infinitely long metal sheets crossed at right angles using Method 2. I set the potential of both sheets to 1 because I wanted the electric field to point away from the sheets, anticipating the analogy I wanted to make to fluid flow.

In the scenario where the metal sheets are a raised cross-shaped object, the fluid ideally flows down directly onto the cross and cascades down its sides into the concave areas between the arms of the cross. The velocity vectors grow in length as the fluid motion speeds up due to its increase in kinetic energy when it flows down the decline at each of the four corners. The increasing distance between equipotential lines indicates that the electric field is getting weaker the further we get from the metal sheets or, analogously, that the fluid speed decreases the further it is from the initial cascading event at the raised platform.

Case 5: Negatively Charged Inner Metal Conductor / Sink Drain Simulation

In the graph above, I modeled the behavior of the electric field around a negatively charged circular metal conductor inside a neutrally charged box using Method 2. I set the potential value of the inner conductor to -1 in order to make the electric field point inward and re-imposed the boundary conditions in the for loop. As expected, the electric field grew stronger the closer it got to the conductor, as indicated by the decreasing distances between the equipotential lines. The electric field vectors also grow in length, indicating the field’s increasing strength.

Analogously, this behavior can simulate fluid going down a sink drain. Ideally, fluid flows down the curved decline of the sink into the drain at the center of the model, where the kinetic energy is at its peak and the velocity slowly increases as indicated by the growing vector length and smaller distances between velocity potential lines.

_______________________________________________________________________________________________

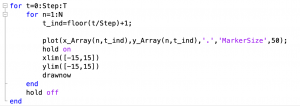

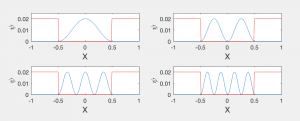

Case 6: Two Finite Parallel Plates / Straight Pipe Flow

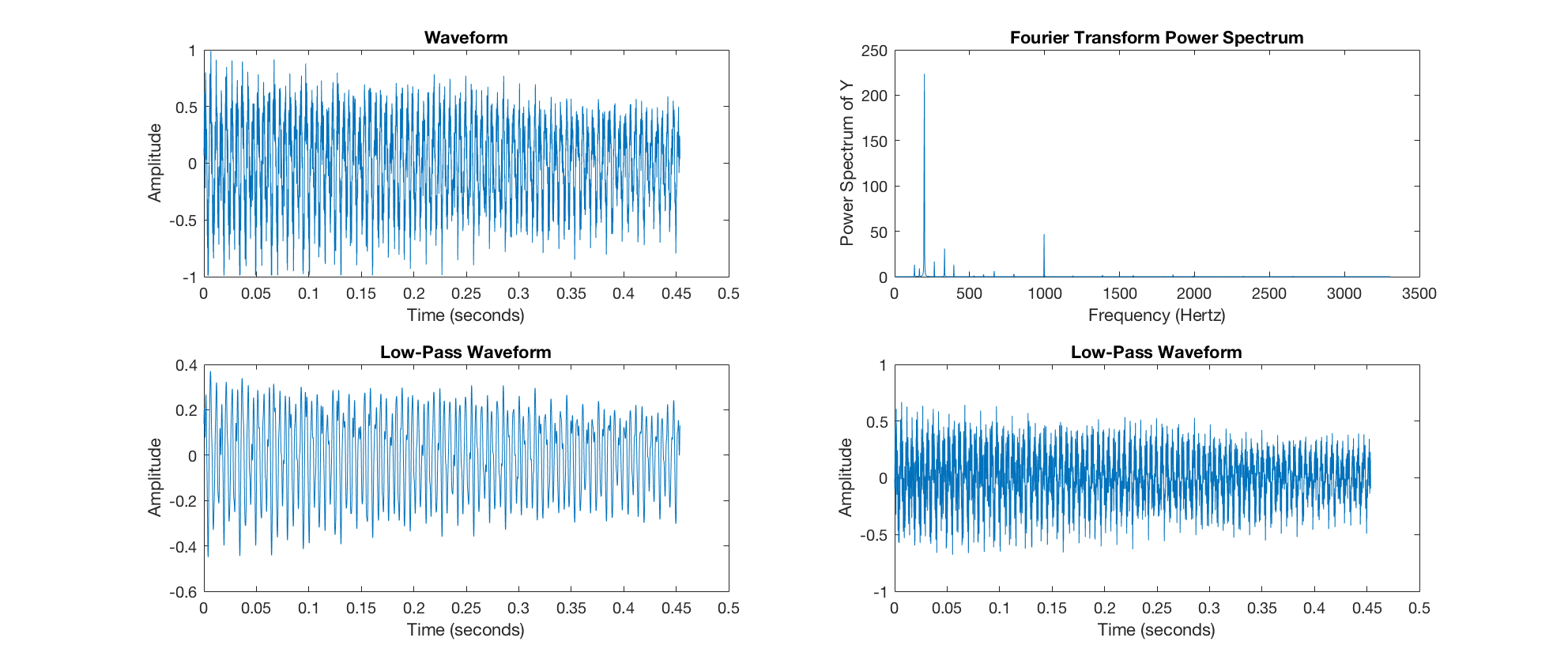

In the graph above, I modeled the behavior of the electric field between two finite parallel plates using Method 2 in order to analogously simulate fluid flow in a straight pipe. I also wanted to eventually model a curved pipe and used this model as an opportunity to build up to that. I used the potential differences between each end of the pipe to simulate the movement of the fluid inside the pipe. I set the left plate/end of the pipe equal to a potential value of 100 and the right plate/end of the pipe equal to a potential value of -100. I reinforced the neutral value of the walls. In comparison to the infinitely long parallel plates I modeled in Case 1, the equipotential lines curved at the ends of the pipe because of the zero potentials at the walls of the pipe. Rather than showing a constant velocity like the ideal model, the model shows a more realistic scenario where the velocity of the fluid flow is quickest at the center of the pipe and slowest the closer it is to the wall where the potential goes to zero. It makes sense to me that the velocity quickens as it reaches the other end of the pipe because of its increase in kinetic energy. This represents a steady, uniform flow.

Case 7: Two Finite Parallel Plates / Straight Pipe Flow w/blockage on Wall of Pipe

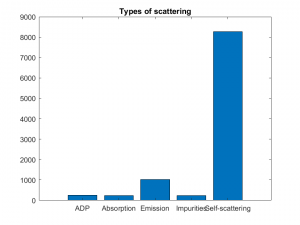

This model is similar to Case 6 but instead tests the behavior of the velocity vectors and velocity potential when there is an object placed on the wall of the pipe, causing a change in the width of the pipe. I utilized Method 2 and kept the boundary conditions the same, with the walls of the pipe having a potential of zero and the ends of the pipe having potential values of 100 and -100. I added a square block with a potential of zero to one wall of the pipe. I wanted it to be recognized as part of the wall, so I set it to the same value as the pipe wall boundary.

The resulting model shows interesting equipotential lines. The long line that follows the edges of the pipe and then passes through the area of the blockage represents a constant equipotential value, and it curves to show the effects of the non-uniform fluid flow. The equipotential lines on the left side of the tube are not as perfectly curved as in Case 6 because they take into account the potential of the blockage.

In the 2nd figure for Case 7, I isolated the velocity vector lines in order to highlight the behavior of velocity vectors around the blockage as they approached and moved away from it. Immediately to the right of the blockage there is a small velocity (short vector lines) because it isn’t in the direct path of the source of the flow whereas the left side of the blockage has high velocity (long vector lines) but stops suddenly because it has hit the object.

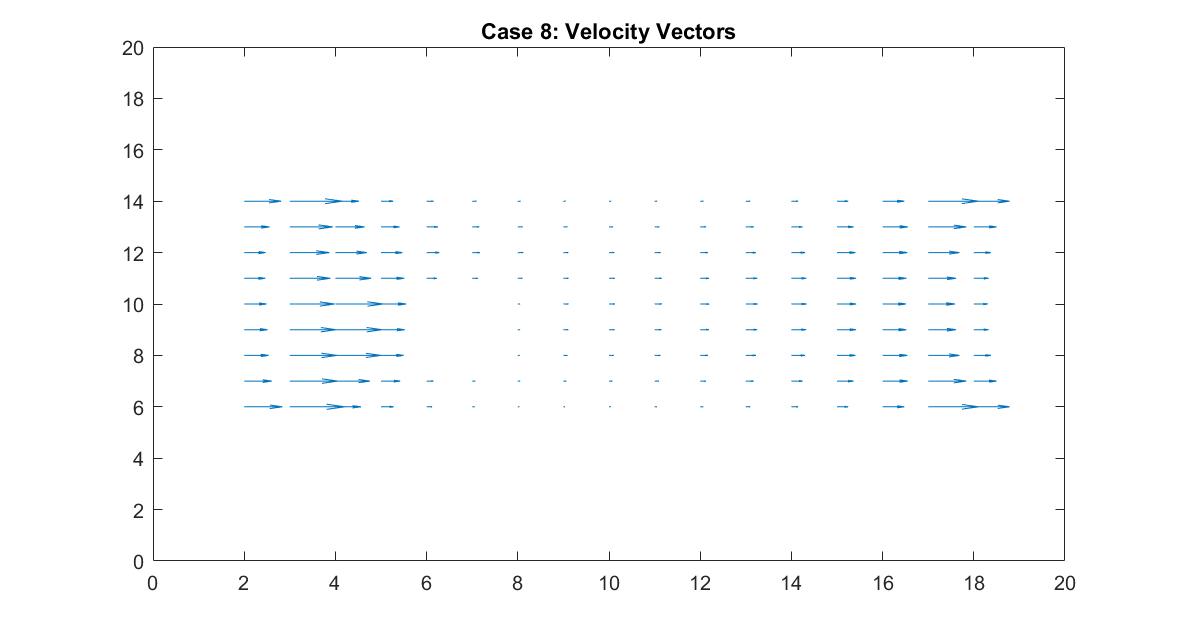

Case 8: Two Finite Parallel Plates / Straight Pipe Flow w/ blockage in the middle of the Pipe

Like Case 7, this model tests the behavior of the velocity vectors and velocity potential by inserting an object inside the pipe but instead places it in the middle of the pipe in the direct path of the fluid flow. I utilized Method 2 and had the same boundary conditions as Case 6 for a straight pipe. I set the potential value of the blockage to zero and reiterated it in the for loop so the Gauss-Seidel Method would recognize the dip in potential value.

The resulting model is similar to Case 7 but because of the position change in the pipe, it has changed its effect on the equipotential lines. The blockage causes the equipotential lines on the left to move closer together and the velocity to increase. As first seen in the example of a straight pipe with no blockages, I set the left end potential equal to 1 and the right end equal to -1. Because of this, the fluid flow at the center of the pipe passes through the potential value of 0. In this example where we have the fluid reach potential values of zero sooner, since it encounters the blockage before the flow reaches the center of the pipe, we notice a faster drop in potential value.

In the 2nd figure for Case 8, I isolated the velocity vector lines in order to highlight the behavior of velocity vectors around the blockage as they approached and moved away from it. Its effect can only really be seen on the right side of the block, where the velocity is much slower because it isn’t in the direct path of the fluid flow.

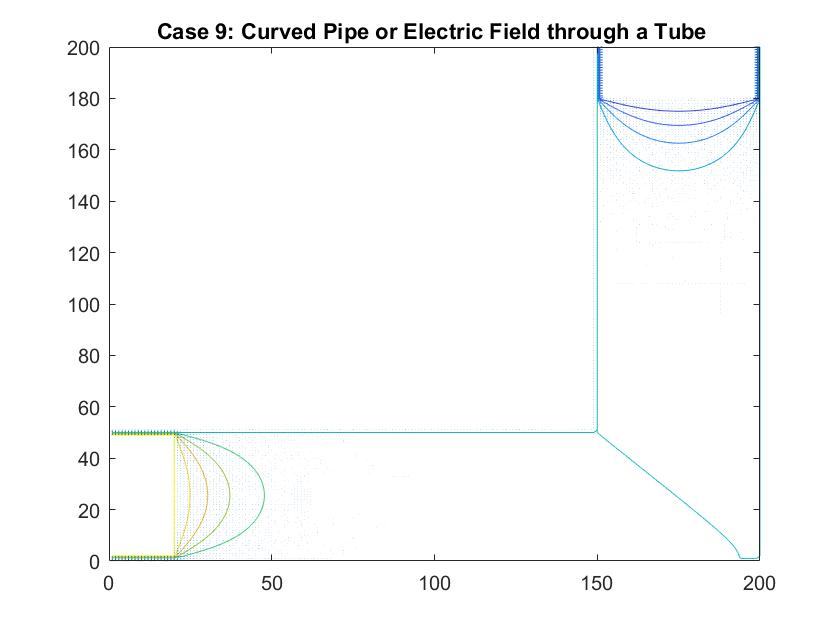

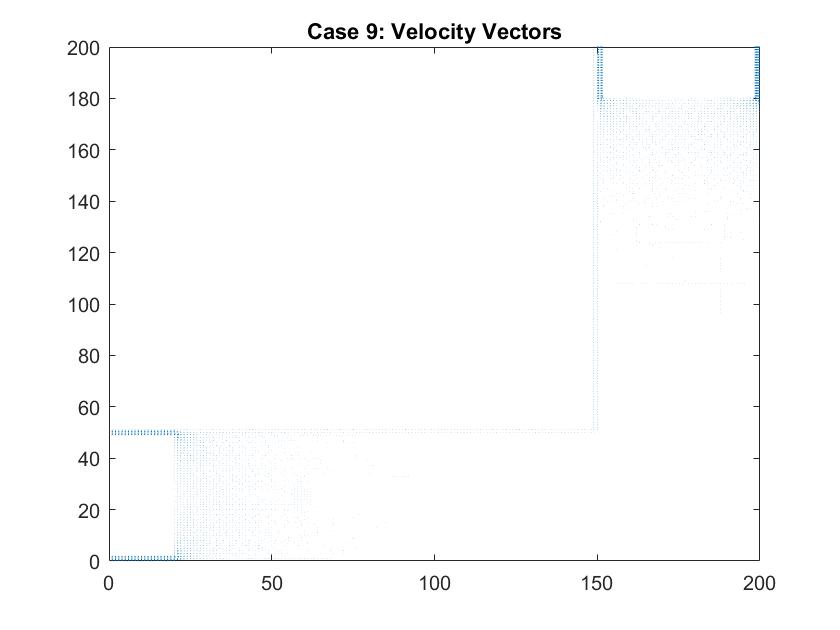

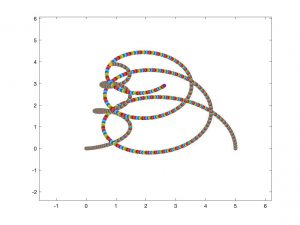

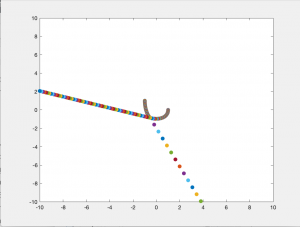

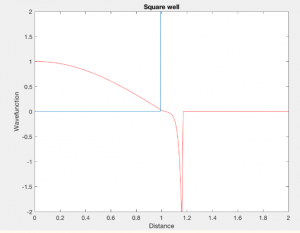

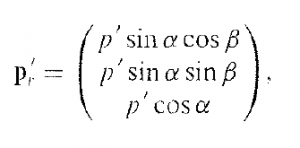

Case 9: Curved Pipe or Electric Field through a Tube

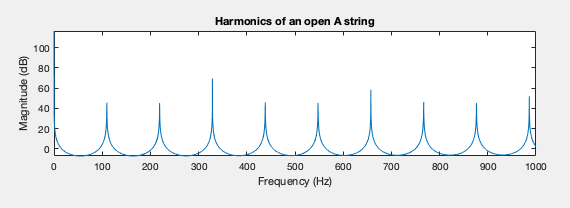

In the graph above I modeled fluid flow through a curved tube using differences in potential values at each end to facilitate movement. I utilized Method 2 of my Gauss-Seidel code to fill in the rest of the potential values inside the tube. I set the four walls of the tube equal to a potential of zero and the ends of the pipe to 100 and -100. There was a much larger cross section of the plot that I needed the Gauss-Seidel to ignore in calculating the potential inside the pipe. After testing out different values, I set the large area outside of the pipe equal to a potential of -10. I chose to do this because this value is relatively far from 0, which is the value for the boundary of the walls of the pipe. Similar to the straight pipe examples, the equipotential lines curve around the ends of the pipe, showing constant potential values. It makes sense that there is one equipotential line at the corner of the pipe at the curve because of the symmetry of the pipe.

In a second figure for Case 9, I isolated the velocity vectors of the fluid flow to highlight the changes in the density of the arrows. Since the dimensions of this plot are much bigger than those of my previous straight pipe models, it is hard to see the differences in the lengths of the velocity vectors. We can assume that areas with a larger density of arrows have higher magnitudes of velocity. Here, they are found around each of the plates, which follows the patterns we saw in the smaller scale straight pipe examples.

Conclusion

Through my work modeling fluid flow using analogies between the Velocity and Electric Potential, I was able to apply computational methods I learned in this course to equations we have been working with since Intro Physics, as well as challenge myself through an immense amount of coding. Knowing the physics behind the scenarios that I was modeling and knowing what to expect in my results made debugging easier and made the project more rewarding. It was also very useful to get into the practice of manipulating equations to explore different concepts, such as using my knowledge of Laplace’s Equation and its applications to Electrostatics to explore Fluid Dynamics, a type of Physics of which I had no prior knowledge.

One of the biggest limitations of my project had to have been the analogy I based this project on. The connection between Velocity and Electric Potential is not intuitive, especially since the way the electric potential is usually derived doesn’t make the relationship so obvious. Also, in modeling fluid flow, I assumed that the only kind of fluid flow is a steady stream without any influence of pressures and outside factors. It is never this perfect but this is something we understand since many models tend to first focus on the most ideal case, which I did for every scenario.

If I were to continue with this project and develop ways to further model Fluid Flow, I would explore other methods that could fill in the missing potentials between our known boundary conditions to see if there are more optimal/accurate ways to calculate them. Additionally, I would try to study the 3D case and create animations that could show the change in vector size as it would move through the simulation.