After some more investigation of our neural network model, we have refined our Monte Carlo flipping rules, stored more than one pattern in our neural network, and found that the inverse of a stored pattern is effectively equivalent to the pattern itself (both are energy minima). Before we delve into these results, we will clear up some questions about our original data post.

Outline:

1. Physics from Statistical Mechanics?

2. A Neural Network

3. Monte Carlo Method

4. Project Goals

5. Constant Energy?

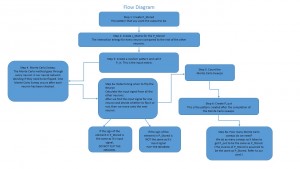

6. Flow Diagram

7. Energy Values

8. Flipping Conditions

9. User Friendly Code

10. Unique Random Pattern

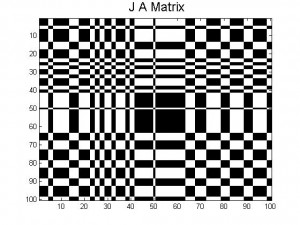

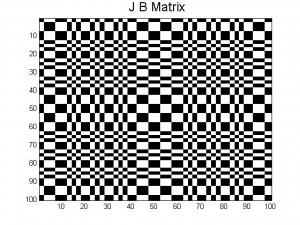

11. J-matrices for A, B, and their average

1. Physics from Statistical Mechanics?

The model that we are using, a grid of neurons that are each in state +1 or -1, is very similar to the Ising model (introduced in Chapter 8 of our text), but with some modifications that make it appropriate for modeling brains. The Ising model was originally created to study how the magnetic spins of a solid interact at different temperatures, in order to explain ferromagnetism vs. paramagnetism and the phase transition between them. In that model, statistical mechanics describes how the spins interact with a heat bath (at nonzero temperature) as well as with each other: sometimes a spin flips randomly because of its interaction with the heat bath. Our neural network model is essentially the Ising model at zero temperature: our neurons never flip randomly on their own, only in response to their connections with other neurons. So our model does not use any physics from statistical mechanics. It does use the Monte Carlo method (which decides whether or not each neuron, or spin, should be flipped), a technique that has many other applications as well.
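To make the zero-temperature point concrete, here is a minimal Python sketch of the standard Metropolis acceptance rule used in Ising-model simulations. This is our assumption about how the finite-temperature case works, and the function names are hypothetical. Setting T = 0 removes every random, energy-raising flip, which matches how our neurons behave.

```python
import math
import random

def flip_probability(delta_E, T):
    """Probability of accepting a single-spin flip that changes the energy by delta_E.

    For T > 0 this is the Metropolis rule: downhill flips are always accepted,
    uphill flips with probability exp(-delta_E / T). At T = 0 only flips that
    lower the energy survive, so no heat-bath-driven random flips happen.
    """
    if delta_E < 0:
        return 1.0
    if T == 0:
        return 0.0
    return math.exp(-delta_E / T)

def maybe_flip(spin, delta_E, T, rng=random):
    """Return the spin value after one Metropolis trial."""
    if rng.random() < flip_probability(delta_E, T):
        return -spin
    return spin

# At T = 0 an energy-raising flip is never taken; at T = 1 it sometimes is
print(flip_probability(2.0, 0), flip_probability(2.0, 1.0))
```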

2. A Neural Network

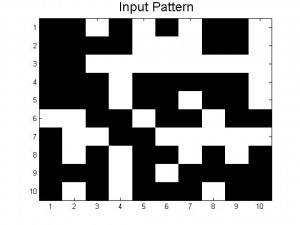

A neural network, in our model, is a grid of neurons (which can have value +1 or -1 only, on or off, firing or not firing), which are completely interconnected (each neuron is connected to every other neuron). The J matrix is where the connections between neurons are stored, and so the “memory” of the system is contained in the J matrix. Patterns can be stored in these neural networks, and, if done correctly, stored patterns can be recalled from distorted versions of them. The way that the neural network travels from the input pattern to the output pattern is via the Monte Carlo method.
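Here is a minimal sketch of how such a J matrix can be built. It assumes the Hebbian form J_ij = s_i s_j, averaged over all stored patterns, and sets the diagonal to zero so that no neuron connects to itself. The zero diagonal, the function name build_J, and the 3x3 example pattern are our assumptions for illustration.

```python
import numpy as np

def build_J(patterns):
    """Build the connection (J) matrix from one or more stored +1/-1 patterns.

    Each 2D pattern is flattened to a vector s, J_ij = s_i * s_j is averaged
    over the patterns, and the diagonal is zeroed (no self-connections).
    """
    vectors = [np.asarray(p, dtype=float).ravel() for p in patterns]
    J = sum(np.outer(s, s) for s in vectors) / len(vectors)
    np.fill_diagonal(J, 0.0)
    return J

# A hypothetical 3x3 pattern (not one of our letters)
P = np.array([[ 1, -1,  1],
              [-1,  1, -1],
              [ 1, -1,  1]])
J = build_J([P])
print(J.shape)   # (9, 9): one row and one column per neuron
```

The matrix is symmetric because J_ij and J_ji are built from the same product s_i s_j.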

3. Monte Carlo Method

The Monte Carlo method is essentially just a way of deciding which neurons to flip. In this case, the goal is to change the input pattern into one of the patterns stored in the neural network. The method checks every neuron, decides whether it should be flipped based on certain flipping rules, flips it if necessary, and then moves on to the next neuron. Once every neuron has had the chance to flip, one Monte Carlo sweep is complete. The details of the flipping rules depend on the model, and the flow diagram of our general code (in the post below) explains our specific flipping rules in greater detail.
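A sweep can be sketched in a few lines of Python. This sketch assumes the neurons are stored as a flattened NumPy vector s and uses the sign-comparison rule described under Flipping Conditions. The function name monte_carlo_sweep and the 9-neuron example are hypothetical.

```python
import numpy as np

def monte_carlo_sweep(s, J):
    """Perform one Monte Carlo sweep over the flattened state s (in place).

    Each neuron is visited once, in order. Its input signal h_i = sum_j J_ij s_j
    uses the current state of the other neurons, and the neuron is flipped only
    if its sign disagrees with the sign of h_i. Returns the number of flips.
    """
    flips = 0
    for i in range(s.size):
        h = J[i] @ s                  # input signal from all other neurons
        if s[i] * h < 0:              # neuron and input signal disagree in sign
            s[i] = -s[i]
            flips += 1
    return flips

# Usage: store one hypothetical 9-neuron pattern, distort one neuron, sweep once
p = np.array([1, -1, 1, -1, 1, -1, 1, -1, 1], dtype=float)
J = np.outer(p, p)
np.fill_diagonal(J, 0.0)              # no self-connections (assumption)
s = p.copy()
s[4] = -s[4]
n_flipped = monte_carlo_sweep(s, J)
print(n_flipped, np.array_equal(s, p))   # prints 1 True
```

Updating neurons one at a time, rather than all at once, means each neuron sees the flips made earlier in the same sweep.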

For our neural network, we want to keep doing Monte Carlo sweeps until the output is equal to one of the stored patterns, which is when we say that the pattern has been recalled. We also want to keep track of how many sweeps it takes to get there, because this is a measure of how quickly the network recalls a pattern. The flow diagram again goes into more detail.
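The outer loop, with its sweep counter, might look like the following sketch. The 1000-sweep cutoff and the check against inverses come from the Constant Energy section. The function name recall, its return values, and the 9-neuron example are our own hypothetical choices.

```python
import numpy as np

def recall(s_in, J, stored, max_sweeps=1000):
    """Run Monte Carlo sweeps until the state matches a stored pattern or its inverse.

    Returns (output_state, sweeps_used, recalled). If no match is found within
    max_sweeps, the final state is returned with recalled=False so that it can
    still be displayed and inspected.
    """
    s = np.array(s_in, dtype=float).ravel()
    targets = [np.asarray(p, dtype=float).ravel() for p in stored]
    for sweep in range(1, max_sweeps + 1):
        for i in range(s.size):          # one sweep: every neuron gets a chance to flip
            h = J[i] @ s
            if s[i] * h < 0:
                s[i] = -s[i]
        if any(np.array_equal(s, t) or np.array_equal(s, -t) for t in targets):
            return s, sweep, True
    return s, max_sweeps, False

# Usage with a hypothetical 9-neuron pattern and two distorted neurons
p = np.array([1, -1, 1, -1, 1, -1, 1, -1, 1], dtype=float)
J = np.outer(p, p)
np.fill_diagonal(J, 0.0)
s0 = p.copy()
s0[[0, 4]] *= -1
out, sweeps, ok = recall(s0, J, [p])
print(sweeps, ok)
```

Returning the sweep count alongside the output makes it easy to compare recall speed across different inputs.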

4. Project Goals

Our goal in this project is to investigate the neural network model presented in the text. First we had to get the model working (meaning we had to be able to store and successfully recall patterns in a neural network). Then we planned to investigate its properties, such as how long memory recall takes for different patterns and different systems, how many patterns can be stored in a single neural network at the same time, and how the performance of the network changes as more patterns are stored. How many of these questions we will get to by the end of this project is another story, but we will do our best.

5. Constant Energy?

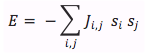

The energy of the system, calculated with Equation 12.14 in the text

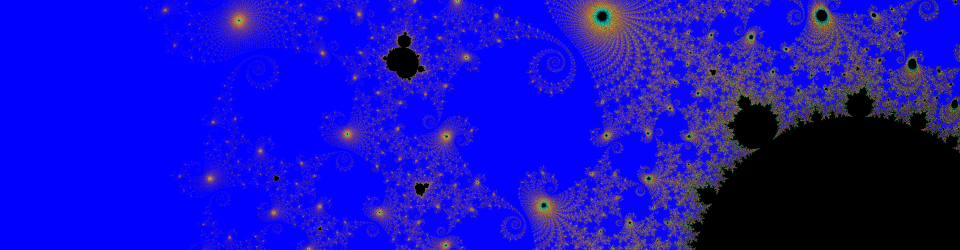

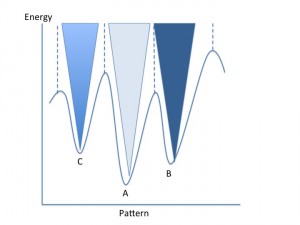

is different for each state that the system can be in. The neural network stores patterns through the way the J matrix is constructed: it is built so that the desired stored pattern(s) are energy minima of the system. Because our Monte Carlo method flips neurons only in ways that lower the total energy of the system, our process should drive P_in towards one of the stored patterns and stop once it reaches a minimum. Figure 1 (inspired by Figure 12.30 in the text) helps visualize this concept of energy minima. It also shows how stored patterns “trap” the Monte Carlo method and prevent the pattern from changing with further sweeps. Once the Monte Carlo method reaches a minimum and finds a stable pattern, it cannot escape from it. This stable pattern is almost always one of the stored patterns or its inverse (discussed below). If the minimum is equal to one of the stored patterns or its inverse, our code stops and displays the output, and we say that our system “recalled” the output pattern. We also have a condition that stops our code after a large number of Monte Carlo sweeps (1000) and displays the output regardless of whether recall was successful. This is needed because the code sometimes gets stuck in a shallow energy minimum that is not equal to one of the stored patterns or one of their inverses. In this case, we still want to display our results and see what went wrong (what pattern our neural network got stuck on).

Figure 1: Schematic energy landscape. Each energy minimum represents one of the stored patterns. When we give our neural network a specific pattern, it produces a pattern with the same or lower energy than the initial pattern.
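The claim that each flip can only lower the energy is easy to check numerically. This sketch assumes Eq. 12.14 has the form E = -Σ_ij J_ij s_i s_j with a zero-diagonal J. It uses a hypothetical random 5x5 stored pattern in place of our letters and records the energy after every neuron during one sweep.

```python
import numpy as np

def energy(s, J):
    """Energy of state s, assuming the form E = -sum_ij J_ij s_i s_j."""
    return -float(s @ J @ s)

def sweep_with_energy_trace(s, J):
    """Run one Monte Carlo sweep on s (in place), recording E after every neuron."""
    trace = [energy(s, J)]
    for i in range(s.size):
        h = J[i] @ s
        if s[i] * h < 0:
            s[i] = -s[i]
        trace.append(energy(s, J))
    return trace

rng = np.random.default_rng(0)
p = rng.choice([-1.0, 1.0], size=25)   # hypothetical stored 5x5 pattern, flattened
J = np.outer(p, p)
np.fill_diagonal(J, 0.0)
s = rng.choice([-1.0, 1.0], size=25)   # random starting state P_in
trace = sweep_with_energy_trace(s, J)
# Each step of the trace either stays flat (no flip) or goes down (a flip)
print(trace[0], trace[-1])
```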

6. Flow Diagram

The attached flow diagram provides a step-by-step guide to our code. It also explains how our neural network performs pattern recognition.

7. Energy Values

We created a code that calculates the energy of our stored patterns, their inverses, and a randomly generated pattern. With this code (Energy Calculating Code), you can compare the energies of these patterns and see which ones sit at energy minima of our neural network. We are interested in the minimum energy because the J matrix is built so that the stored patterns are energy minima, and those minima are where the Monte Carlo method comes to rest during pattern recognition.

The energy of the stored patterns is much lower than that of the randomly generated pattern, because our stored patterns have an order about them that the random pattern lacks. The energy of the stored patterns and of their inverses is -5000. The energy of the random pattern is always greater than -5000 and close to, but never greater than, zero.

Furthermore, because the stored patterns and their inverses have the same energy, we can treat a stored pattern and its inverse as equivalent. In other words, our code starts off with black-blocked letters, and the inverse is white-blocked letters. Although black and white are two different colors, they represent the same letter, since both have identical energies. This matters when we distort a stored pattern by 80-90%. The distorted pattern is then much closer to the inverse image than to the proper image, so the Monte Carlo method slides into the inverse's energy minimum and the inverse image is displayed as our P_out. To avoid this confusion, we set a condition that changes the inverse image back to the proper image.
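The equal energies of a pattern and its inverse follow from the energy being quadratic in the neuron states: flipping every s_i to -s_i leaves each product s_i s_j unchanged. The sketch below makes several assumptions:

- E = -Σ_ij J_ij s_i s_j;
- J has a zero diagonal;
- a hypothetical random 5x5 pattern stands in for our letters.

It compares the three energies and includes a hypothetical undo_inverse helper similar to the condition described above. With a zero diagonal, a random pattern's energy can come out slightly positive. Keeping J_ii = 1 instead shifts every energy down by N, the number of neurons, which would be consistent with the random energies we saw never exceeding zero.

```python
import numpy as np

def energy(s, J):
    """Assumed form of Eq. 12.14: E = -sum_ij J_ij s_i s_j."""
    return -float(s @ J @ s)

def undo_inverse(s_out, stored):
    """If s_out is the inverse of a stored pattern, flip it back for display."""
    for p in stored:
        if np.array_equal(s_out, -p):
            return -s_out
    return s_out

rng = np.random.default_rng(1)
p = rng.choice([-1.0, 1.0], size=25)   # hypothetical stored 5x5 pattern, flattened
J = np.outer(p, p)
np.fill_diagonal(J, 0.0)
r = rng.choice([-1.0, 1.0], size=25)   # random comparison pattern

# E(p) and E(-p) agree because each product s_i s_j is unchanged by s -> -s
print("stored:", energy(p, J), "inverse:", energy(-p, J), "random:", energy(r, J))
shown = undo_inverse(-p, [p])          # an inverse output is displayed as the stored pattern
```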

Link to code: Energy Calculating Code

8. Flipping Conditions

As illustrated in our flow diagram, the flipping conditions determine whether or not a neuron is flipped from inactive to active or vice versa. An input signal is calculated for each neuron, and the sign of that input signal is compared to the sign of the neuron. If the two signs are the same, the neuron is not flipped and remains in its current state. If the signs differ, the neuron is flipped to the state that matches the sign of the input signal.

The input signal tells the neuron which state to be in. If the input signal is greater than zero, it tells the neuron to be in the +1 state. If it is less than zero, it tells the neuron to be in the -1 state.
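The derivation below shows why this rule always moves the system downhill. It assumes Eq. 12.14 has the form below, with a symmetric J and zero diagonal (our assumption), and writes h_k for the input signal of neuron k. The terms of E that contain s_k add up to -2 s_k h_k, and flipping s_k reverses their sign:

```latex
\begin{aligned}
E &= -\sum_{i,j} J_{ij}\, s_i s_j, \qquad h_k = \sum_{j \neq k} J_{kj}\, s_j, \\
\Delta E_k &= E\big|_{s_k \to -s_k} - E = 4\, s_k h_k .
\end{aligned}
```

A neuron is flipped exactly when s_k and h_k have opposite signs, that is, when s_k h_k < 0. Every flip therefore changes the energy by ΔE_k = -4|h_k| < 0.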

9. User Friendly Code

This code allows for user input to determine which of a variety of input patterns P_in to investigate. The choices for possible input patterns are listed below (and in comments in the code, if the user forgets):

- P_A and P_B are simply equal to the stored patterns

- negP_A and negP_B are the inverses of the stored patterns

- distP_A and distP_B are distorted versions of P_A and P_B, distorted by an amount input by the user

- Rand is a random pattern with roughly equal numbers of +1s and -1s

- Rand_weird (half-way pattern) is a specific randomly created matrix that gets our code stuck, and we don’t know why…
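The distorted and random inputs can be generated roughly as below. This is a Python sketch, not our actual code. It assumes the user's distortion amount is the fraction of neurons to flip. The names distort and random_pattern, and the random 5x5 stand-in for P_A, are hypothetical.

```python
import numpy as np

rng = np.random.default_rng()

def distort(pattern, fraction, rng=rng):
    """Return a copy of pattern with round(fraction * N) randomly chosen neurons flipped."""
    s = np.array(pattern, dtype=float).ravel()   # flattened copy of the input
    n_flip = int(round(fraction * s.size))
    idx = rng.choice(s.size, size=n_flip, replace=False)
    s[idx] *= -1
    return s.reshape(np.shape(pattern))

def random_pattern(shape, rng=rng):
    """A random grid with roughly equal numbers of +1 and -1."""
    return rng.choice([-1.0, 1.0], size=shape)

P_A = random_pattern((5, 5))   # hypothetical stand-in for our letter A
inputs = {
    "P_A": P_A,
    "negP_A": -P_A,
    "distP_A": distort(P_A, 0.2),   # 20% of the 25 neurons flipped
    "Rand": random_pattern(P_A.shape),
}
```

Sampling without replacement guarantees that exactly the requested number of distinct neurons is flipped.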

Rand_weird is a specific instance of an input pattern where weird things happened, discussed more in the next section.

Link to Code: User Friendly Code

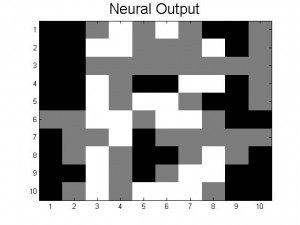

10. Unique Random Pattern

While we were checking randomly generated input patterns, we ran into one unique pattern; let’s call it the half-way pattern. The half-way pattern appears to be torn between becoming an A or a B: no matter how many Monte Carlo sweeps it goes through, it never settles into either stored pattern. It is also the only randomly generated pattern, so far, whose output contains gray blocks, where the gray blocks represent the value 0. Remember that the only two states our neurons are supposed to have are +1 and -1. This gray output has an energy of -1745.
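We have not verified this against our code, but one possible way a 0 can appear is a zero input signal. If the update copies sign(h) into the neuron, a neuron whose input signal is exactly zero gets the value 0, because many sign functions (including NumPy's np.sign) return 0 for an input of 0. With an averaged J matrix, contributions from A and B can cancel exactly. The tiny 6-neuron patterns below are hypothetical.

```python
import numpy as np

# Two hypothetical 6-neuron patterns: A is all +1, B differs from A in its second half
a = np.ones(6)
b = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])
J = (np.outer(a, a) + np.outer(b, b)) / 2   # averaged J matrix
np.fill_diagonal(J, 0.0)

s = np.array([1.0, 1.0, -1.0, 1.0, -1.0, -1.0])  # a hypothetical mixed state
h0 = J[0] @ s          # input signal for neuron 0: the two connections cancel to 0.0

naive = np.sign(h0)    # copying the sign of h writes 0.0 into the neuron
safe = -s[0] if s[0] * h0 < 0 else s[0]   # flipping only on strict disagreement keeps +1
print(h0, naive, safe)
```

A rule that flips only when s_i h_i < 0 leaves a neuron with zero input signal unchanged, so it can never produce a 0.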

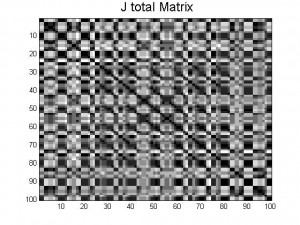

11. J-matrices for A, B, and their average

Here are the J-matrices created from pattern A, from pattern B, and from the average of the two. As you can see, each J-matrix has some visible structure, but it is not a single pattern that repeats across the whole matrix. Instead, different sections of each J-matrix show their own patterns.
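One structural feature of the averaged matrix can be checked directly. Wherever A_i A_j and B_i B_j have opposite signs, the two contributions cancel and the averaged entry is exactly zero, so those pairs of neurons are effectively disconnected in the combined network. The sketch below assumes zero-diagonal Hebbian J-matrices and uses random 25-neuron stand-ins for A and B (hypothetical, not our letters).

```python
import numpy as np

def hebbian_J(s):
    """J_ij = s_i s_j for one flattened pattern, with no self-connections."""
    J = np.outer(s, s)
    np.fill_diagonal(J, 0.0)
    return J

rng = np.random.default_rng(2)
A = rng.choice([-1.0, 1.0], size=25)   # stand-ins for our 5x5 letters A and B
B = rng.choice([-1.0, 1.0], size=25)
J_A, J_B = hebbian_J(A), hebbian_J(B)
J_avg = (J_A + J_B) / 2

# Off-diagonal entries of J_avg are -1, 0, or +1; zeros mark cancelled connections
off_diag = ~np.eye(25, dtype=bool)
n_zero = np.count_nonzero(J_avg[off_diag] == 0)
print(J_avg.shape, n_zero)
```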

On 2.: It seems that not every neuron is connected to EVERY other neuron, since there are different connection patterns. Is that correct?

On 7.: When you say an energy of “-5000” what are your units/reference point?

This is a really nice write up on the basics of neural networks. I am still wondering how and why the Monte Carlo Method works and how the energy state is so low for ordered systems.

This may be unrelated and somewhat random, however, why is it that entropy (disorder) in chemistry always increases and is actually considered a lower state of energy?