Before I comment, let me apologize for getting this done somewhat late. I’m at a conference in Pittsburgh right now and it’s been crazy!

To begin, I found the goals of the project to be quite clear: to perform frequency shifting on recorded or computed sound signals, specifically ultrasound signals. I’ve personally often wondered about this problem, and the ramifications of trying to fix it (e.g., with respect to a record player which, when spinning at the wrong speed, plays back sound either too low and slow or too high and fast).

Next I move to your “Ultrasonic Analysis” post.

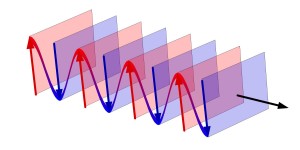

Your explanation of “ultrasonic” is crystal clear, though I suppose the name is pretty straightforward. Next, however, you mention that it is necessary to sample at a rate slightly higher than the Nyquist frequency. Why is that? I suppose it’s because you have ultrasonic (above 20000 Hz) signals, but when I first looked through this, that fact wasn’t immediately clear. Also, what was your upper frequency bound (at least at the outset, before you realized you couldn’t measure ultrasonic sound)?
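To make the sampling question concrete for other readers, here is a small sketch of what happens to a tone that sits above half the sample rate. The 25 kHz test tone, the two sample rates, and the function name `alias_frequency` are my own choices for illustration, not anything taken from your posts.

```python
def alias_frequency(f_hz, fs_hz):
    """Apparent frequency of a real tone at f_hz when sampled at fs_hz."""
    # Fold f_hz back into the baseband [0, fs_hz / 2] by subtracting
    # the nearest integer multiple of the sample rate.
    nearest_multiple = round(f_hz / fs_hz) * fs_hz
    return abs(f_hz - nearest_multiple)


# Hypothetical ultrasonic tone, sampled at two common audio rates.
for fs in (44100, 96000):
    print(fs, alias_frequency(25000, fs))
```

At 44.1 kHz the tone lies above half the sample rate, so it folds down into the audible band. At 96 kHz it is captured at its true frequency.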

So you’ve changed from shifting high frequencies down to shifting low frequencies up, since you couldn’t detect ultrasound. Are there any major differences, that you know of, between the two approaches? Is one more difficult than the other? It seems like you have to worry less about aliasing errors in the low frequencies, which is nice. Also, the frequency difference between octaves (or other harmonically “nice” steps) is much smaller at low frequencies than at high frequencies. Does this mean that techniques that preserve harmonic qualities are easier to implement?
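As a quick illustration of the octave point, this sketch prints how wide an octave is in Hz at a few starting frequencies. The frequencies and the helper name `interval_width_hz` are mine, chosen only to show the trend.

```python
def interval_width_hz(f_hz, ratio=2.0):
    """Width in Hz of the interval from f_hz up to ratio * f_hz.

    ratio=2.0 is an octave; other ratios give other musical intervals.
    """
    return f_hz * ratio - f_hz


# Hypothetical starting frequencies, low to high.
for f in (110, 880, 10000):
    print(f, interval_width_hz(f))
```

The width of an octave grows in proportion to the starting frequency, which is why the "nice" steps are packed so much more tightly at the low end.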

Now I’ll discuss your “Preliminary Data” post.

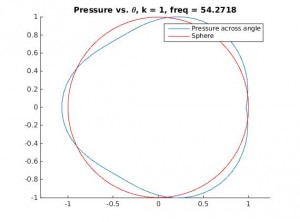

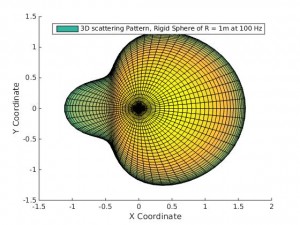

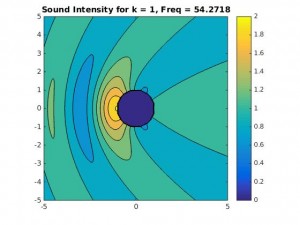

The inclusion of several examples is quite well done here. With respect to audio, it’s not too cluttered, but gives enough context for those who may be unfamiliar with the basic concepts. However, I do think some visual examples could be beneficial. I think that a combination of sight and sound can really make what you’re doing clear to the reader/listener.

You’ve explained up- and down-sampling really well—I’m just curious how the algorithm is put into practice. Did you experiment with removing different points, and compare the results? Would there even be a noticeable difference?
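To show what I mean by removing different points, here is a minimal sketch of naive down-sampling by a factor of 2 that keeps either the even-indexed or the odd-indexed samples. I don’t know which scheme you actually used. The 440 Hz tone, the 8000 Hz sample rate, and the `decimate` helper are assumptions of mine, and there is no anti-aliasing filter here.

```python
import math


def decimate(samples, factor, offset=0):
    """Keep every `factor`-th sample, starting at index `offset`.

    This is naive decimation: no low-pass filter is applied first.
    """
    return samples[offset::factor]


fs = 8000  # assumed sample rate in Hz
tone = [math.sin(2 * math.pi * 440 * n / fs) for n in range(64)]

kept_even = decimate(tone, 2, offset=0)  # drops the odd-indexed points
kept_odd = decimate(tone, 2, offset=1)   # drops the even-indexed points

# Largest sample-by-sample difference between the two versions.
largest_gap = max(abs(a - b) for a, b in zip(kept_even, kept_odd))
print(len(kept_even), len(kept_odd), round(largest_gap, 3))
```

For a pure tone well below the new Nyquist limit, the two versions are the same sinusoid offset by one original sample period, so I would expect the audible difference to be small. Content above the new limit would alias either way unless it is filtered out first.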

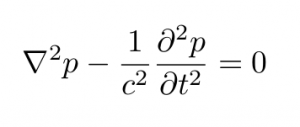

I’m a little confused when you talk about phase shifting… is this just shifting in the frequency domain? I was always under the impression that “phase” had a different meaning for acoustic waves, one that is extracted from the complex (real and imaginary) sound data in the frequency domain.
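Here is the sense of “phase” I have in mind, as a small sketch: the angle of a complex Fourier coefficient. The signal length, bin index, and phase offset below are arbitrary values of mine, and `dft_bin` is a hand-rolled helper rather than a library call.

```python
import cmath
import math


def dft_bin(samples, k):
    """Single coefficient X[k] of the discrete Fourier transform."""
    n_total = len(samples)
    return sum(
        x * cmath.exp(-2j * cmath.pi * k * n / n_total)
        for n, x in enumerate(samples)
    )


N = 64     # number of samples (assumed)
k0 = 4     # the tone completes exactly 4 cycles in N samples
phi = 0.7  # phase offset in radians (assumed)

signal = [math.cos(2 * math.pi * k0 * n / N + phi) for n in range(N)]

X = dft_bin(signal, k0)
magnitude = abs(X)      # set by the amplitude of the tone
phase = cmath.phase(X)  # atan2(imag, real), recovers phi here
print(round(magnitude, 6), round(phase, 6))
```

In this picture, moving a component to a different frequency means moving it to a different bin `k`, while changing its phase means rotating the complex value within the same bin.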

Now I’m discussing your “More Results” post.

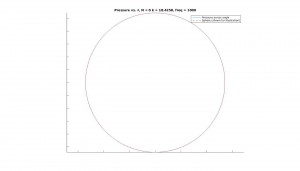

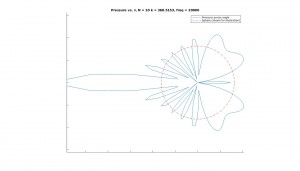

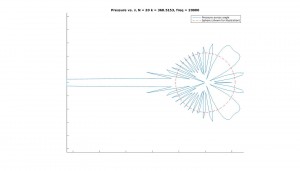

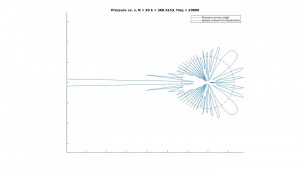

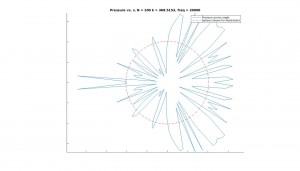

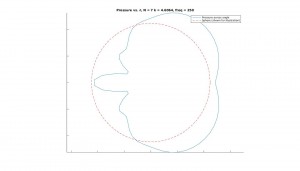

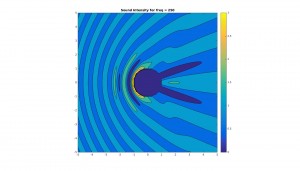

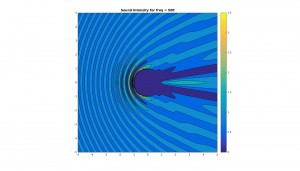

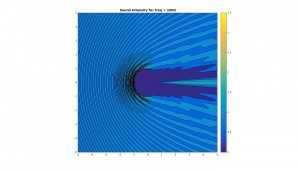

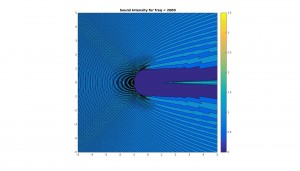

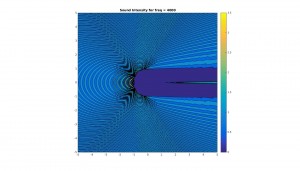

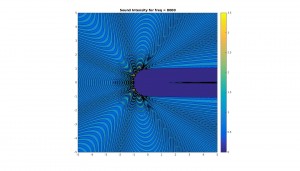

About halfway down, you mention the “harmonic quality” situation—this would be the perfect place for an image (perhaps show it logarithmically so that you don’t bunch up the high frequencies in the graph), to help those of us who prefer to learn visually. Or, perhaps a better way would be to come up with a pretty universally understandable analogy that makes the comparison/conceptualization easier for the viewer. I’m not sure what this would be, but maybe you could think of something.

I like that you again included several sound files, since it gives listeners who perhaps couldn’t perceive differences during your presentation another chance to go back and listen for some subtle differences, especially with regard to harmonic characteristics (or if anyone decided to listen before your presentation).

Now I’ve moved to your “conclusions” post.

Again you’ve shifted—back to high frequencies. Why is this?

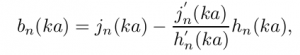

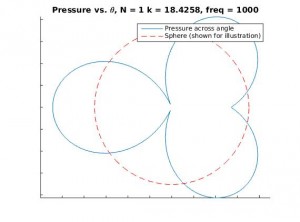

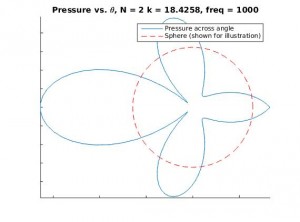

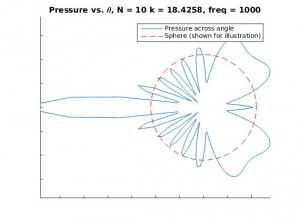

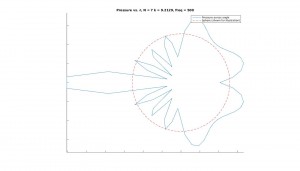

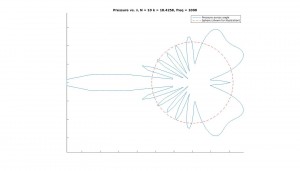

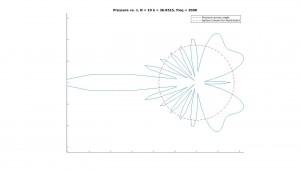

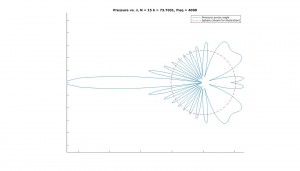

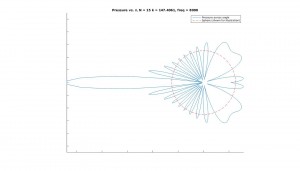

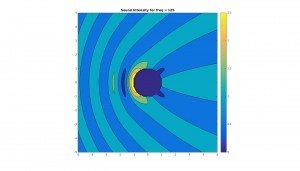

Conceptually, your methodology makes a lot of sense to me; it’s pretty straightforward to imagine what’s going on. However, I’m still a little confused as to the actual algorithm you used. Did you use the downsampling technique you mentioned earlier? From your graphs, it looks like you performed a “circshift” in the frequency domain.
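This is my guess at what a “circshift” in the frequency domain would look like, written as a small sketch. It is not necessarily what you did. The 64-sample tone, the 3-bin shift, and the helpers `dft`, `circshift`, and `peak_bin` are all my own assumptions.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (fine for short signals)."""
    n_total = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_total)
            for n in range(n_total))
        for k in range(n_total)
    ]


def circshift(seq, shift):
    """Rotate a list to the right by `shift` positions, wrapping around."""
    shift %= len(seq)
    return seq[-shift:] + seq[:-shift]


def peak_bin(spectrum):
    """Index of the largest-magnitude bin in the first half of the spectrum."""
    half = len(spectrum) // 2
    return max(range(half), key=lambda k: abs(spectrum[k]))


N = 64
tone = [math.cos(2 * math.pi * 5 * n / N) for n in range(N)]  # peak at bin 5

spectrum = dft(tone)
shifted = circshift(spectrum, 3)  # every bin moves up by 3

print(peak_bin(spectrum), peak_bin(shifted))
```

One thing I noticed while writing this: rotating the whole spectrum moves the negative-frequency peak in the same direction as the positive one, so the result is no longer conjugate-symmetric and the inverse transform would not be purely real. I’d be curious whether you shifted only the positive half and mirrored it.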

You say “rat mediation”… I wonder, did you mean “rat meditation?” Silly rats.

What you mention right before your future work, making an abrasive sound from two sinusoids, is super cool. I’d love to see some results there. The future work sounds cool, too. Say you were to multiply each frequency in your signal by 2 in the frequency domain. Is that what you mean by “transposition”? Were there any big problems that made it difficult to do this analysis? I imagine that a little more than just multiplying by a constant goes into it.
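To spell out why I’m asking about multiplication, here is a small sketch comparing a constant shift in Hz with a constant scale factor, applied to a made-up harmonic series. I’m assuming “transposition” means scaling every frequency by the same factor. The frequencies, the 100 Hz offset, and the helper names are mine.

```python
def shift_by(freqs, offset_hz):
    """Add the same number of Hz to every frequency."""
    return [f + offset_hz for f in freqs]


def scale_by(freqs, factor):
    """Multiply every frequency by the same factor."""
    return [f * factor for f in freqs]


def ratios_to_first(freqs):
    """Ratio of each frequency to the lowest (first) one."""
    return [f / freqs[0] for f in freqs]


harmonics = [220, 440, 660]  # hypothetical fundamental plus two harmonics

print(ratios_to_first(harmonics))
print(ratios_to_first(scale_by(harmonics, 2)))    # ratios unchanged
print(ratios_to_first(shift_by(harmonics, 100)))  # ratios no longer integers
```

Scaling keeps the 1:2:3 relationship between the components, while shifting by a fixed number of Hz breaks it.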

Other remarks:

I also wanted to note that during your talk you mentioned the “harmonic relations” between frequencies, and how such relations (since they are ratios) are not conserved. You explained that this could lead to dissonance and other sorts of unpleasant sounds, which I think you demonstrated superbly both with your description and with your audio example. Well done.

Overall, this project was very interesting, and relatively easy to follow conceptually. I appreciate the focus on sound and demonstrating sound behavior to the audience. As I mentioned periodically, I think your work could benefit from some more visuals (that is not to say that the images you do have are not good; I think they are actually quite appropriate).