Awardee: Shane Slattery-Quintanilla

Semester of Award: Spring 2020

Materials Awarded: iPad Pro with LiDAR Scanner and Apple Pencil

Project Description:

• Introduction to the technology (excerpted from the original proposal):

The 2020 iPad Pro features a LiDAR (short for "light detection and ranging") scanner that creates a 3D map of the surrounding environment. The device combines this depth information with camera and motion data for a wide variety of purposes, some of which are already starting to change the way filmmakers and other media artists work.

• Implementation so far:

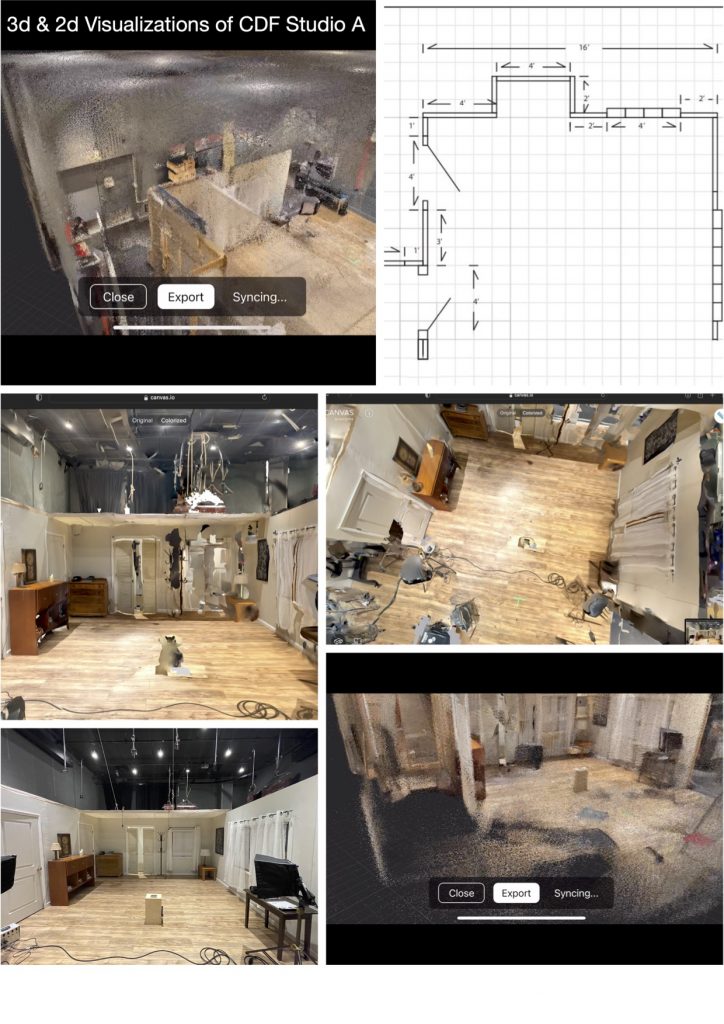

So far the technology has proven especially useful during pre-production, when I work with students or other collaborators on the visualizations needed to plan shoots. The elements we typically visualize include the placement of cameras, lights, actors, and props, as well as the movement of the actors and the camera during a scene. The ability to quickly create an accurate 3D map of a shooting location enhances what might otherwise be only hand-drawn visualizations. For instance, instead of relying solely on a 2D overhead sketch of a location, we can use LiDAR imaging, especially on a touch-screen device like the iPad, to intuitively "move through" the 3D image and try out different placements of virtual props, cameras, and actors (see the attached image for examples of this in the Production Studio space in the CDF). We can even use keyframe animation to virtually block out potential movements by actors or by the camera. Because the iPad accepts input from the Apple Pencil, we can also draw directly onto the LiDAR-generated images. I've found that this combination of new-media imaging with old-media sketching opens up many opportunities, especially in the pre-production and development stages, when we want to try out a lot of different ideas. An additional benefit of creating 3D models of shooting locations, both under pandemic conditions and beyond, is that remote collaborators can actively and safely participate in pre-production without being in the same space.

• Emerging possibilities/future implementation:

Eventually I want to work more with Film Department students and staff to explore LiDAR-enhanced apps and techniques that allow for new and often highly experimental approaches to filmmaking. These techniques include combining video with augmented reality ("AR") imaging or other kinds of CGI that are mapped in real time onto otherwise conventional video footage, and capturing "volumetric" video that incorporates 3D data into 2D video. So far I have tried some existing AR apps to see what this might look like, but most of them are not designed specifically for this purpose. I have been talking with developers, such as those at DepthKit (https://www.depthkit.tv), about what solutions might be available to me as an educator or beta tester, and I have also downloaded and begun to explore Apple's AR developer tools. Although I don't expect to master these more advanced tools, I hope that gaining some familiarity with them will help me communicate to the people at DepthKit or elsewhere the kinds of approaches I imagine might serve my creative and pedagogical goals.

• What other faculty, staff, and students might find useful about LiDAR-enabled technology like the iPad:

I think colleagues in Drama who work in set building and design might find these 3D imaging tools useful, and I look forward to checking in with them about those possibilities. Some faculty, staff, and students in Studio Art (including Video Art) and in Media Studies would also likely find interesting uses for this technology. In terms of particular courses outside my own, there may be ways to design exercises and assignments for MEDS250 Exploratory Media Practices that make use of this and related AR-ready devices, including the most recent wave of smartphones with built-in LiDAR sensors.